Texture Generation: AI-Powered 3D Model Texturing

The Texture Generation tool provides AI-powered texture creation for 3D models, allowing you to generate high-quality materials for any 3D object. This tool works with models created within 3D AI Studio or imported from external 3D software including ZBrush, Blender, Cinema 4D, Maya, 3ds Max, and any other 3D application that can export FBX, GLB, or OBJ files.

Texture Generation supports both creating new textures for untextured models and modifying the materials of models that are already textured. The tool can generate physically-based rendering (PBR) materials with multiple texture maps, creating professional-quality results suitable for games, visualization, and 3D printing applications.

Accessing the Texture Generation Workspace

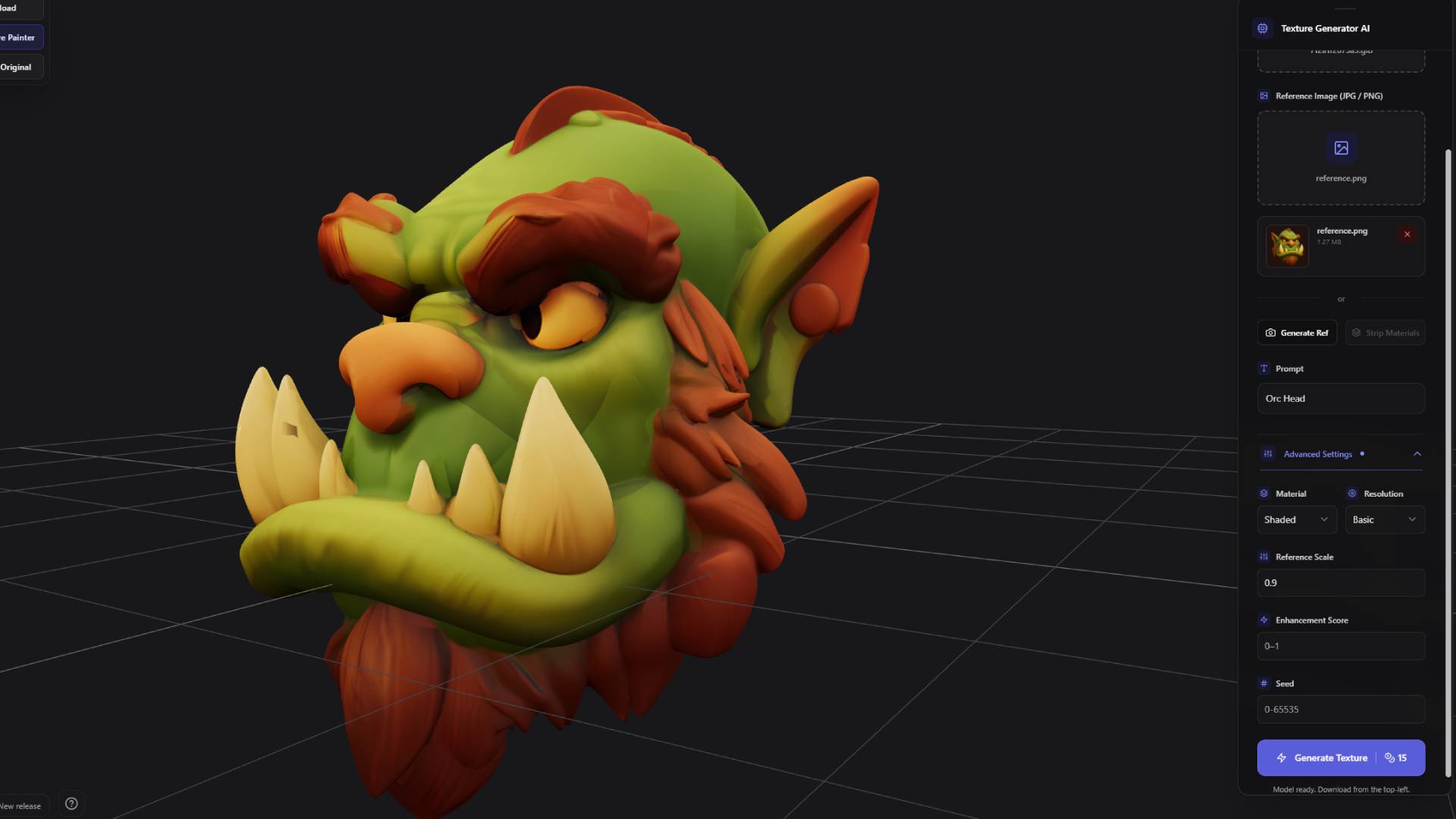

The Texture Generation tool is located in the left sidebar of your 3D AI Studio interface. Click on the "Texture Generator" tab to access the workspace. The interface consists of two main areas: a central 3D viewer that displays your uploaded model, and a settings panel on the right side that contains all the controls and options for texture generation.

This layout provides an efficient workflow where you can immediately see how texture changes affect your model while having easy access to all generation parameters. The 3D viewer supports real-time preview of generated textures, allowing you to evaluate results before finalizing your choices.

Supported File Formats and Requirements

Texture Generation accepts 3D models in three primary formats: FBX, GLB, and OBJ. Each format has specific advantages depending on your workflow and source application.

GLB Format is the preferred format for Texture Generation. GLB files provide the most reliable results and fastest processing times. The 3D viewer is optimized for GLB files and displays them with the highest fidelity. If your model is in another format, converting it to GLB before upload will ensure the best experience and most accurate texture generation results.

FBX Format offers broad compatibility with most 3D software applications. FBX files can contain complex scene hierarchies, animations, and existing materials. FBX files work well with Texture Generation, but they may take slightly longer to process than GLB files.

OBJ Format provides simple, reliable geometry support that works well across different 3D applications. OBJ files are lightweight and focus on geometry data, making them suitable for models where simplicity and compatibility are priorities.

All uploaded models must be under 20 megabytes in file size. This limit ensures reasonable processing times while accommodating most practical 3D models. If your model exceeds this size limit, consider optimizing the geometry or removing unnecessary elements before upload.
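
The format and size requirements above can be checked locally before you upload. The following is a minimal sketch, not part of 3D AI Studio: the function name `check_model_file` is hypothetical, and it assumes that "20 megabytes" means 20 × 1024 × 1024 bytes, which the documentation does not specify.

```python
from pathlib import Path

# Values taken from the requirements above.
# Assumption: "20 megabytes" is interpreted as 20 * 1024 * 1024 bytes.
SUPPORTED_EXTENSIONS = {".glb", ".fbx", ".obj"}
MAX_SIZE_BYTES = 20 * 1024 * 1024


def check_model_file(path):
    """Return a list of problems found; an empty list means the file looks OK."""
    p = Path(path)
    problems = []
    if p.suffix.lower() not in SUPPORTED_EXTENSIONS:
        problems.append(f"unsupported format '{p.suffix}' (expected GLB, FBX, or OBJ)")
    if not p.is_file():
        problems.append("file not found")
    elif p.stat().st_size >= MAX_SIZE_BYTES:
        size_mb = p.stat().st_size / (1024 * 1024)
        problems.append(f"file is {size_mb:.1f} MB; it must be under 20 MB")
    return problems
```

Calling `check_model_file("orc.glb")` returns an empty list when the file passes both checks. The check only looks at the extension and size; it does not validate the file contents.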

Models can be uploaded with or without existing textures. If your model already has materials applied, Texture Generation can use these as a starting point for modification or enhancement. Alternatively, you can upload completely untextured models and generate entirely new materials from scratch.
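
If you are unsure whether a GLB file already carries textures, you can inspect it before uploading. The sketch below reads the JSON chunk defined by the glTF 2.0 binary container and reports whether any textures or images are declared. The helper name `glb_has_textures` is hypothetical, and the check is a local convenience only; it says nothing about how Texture Generation itself detects existing materials.

```python
import json
import struct

GLB_MAGIC = 0x46546C67        # ASCII "glTF" read as a little-endian uint32
JSON_CHUNK_TYPE = 0x4E4F534A  # ASCII "JSON" read as a little-endian uint32


def glb_has_textures(data: bytes) -> bool:
    """Return True if the GLB's JSON chunk declares at least one texture or image."""
    if len(data) < 20:
        raise ValueError("too short to be a GLB file")
    # 12-byte header: magic, container version, total file length.
    magic, _version, _total_length = struct.unpack_from("<III", data, 0)
    if magic != GLB_MAGIC:
        raise ValueError("not a GLB file")
    # The first chunk follows the header and must be the JSON chunk.
    chunk_length, chunk_type = struct.unpack_from("<II", data, 12)
    if chunk_type != JSON_CHUNK_TYPE:
        raise ValueError("first chunk is not JSON")
    gltf = json.loads(data[20:20 + chunk_length].decode("utf-8"))
    return bool(gltf.get("textures") or gltf.get("images"))
```

For a file on disk, pass its bytes, for example `glb_has_textures(open("model.glb", "rb").read())`. A model can also have untextured materials with flat colors, which this check reports as having no textures.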

Uploading Your 3D Model

To begin texture generation, locate the upload section in the settings panel on the right side of the interface. This section contains an upload button where you can select your 3D model file from your computer. Simply click the upload button and navigate to your model file, or drag and drop the file directly onto the upload area for faster access.

Once you select a file, the upload process begins automatically. Upload times depend on file size and complexity, but most models under the 20MB limit upload within a few seconds. During upload, a progress indicator shows the current status.

After successful upload, your 3D model appears in the central 3D viewer. The viewer automatically adjusts to frame your model appropriately, providing a clear view of the geometry. You can interact with the viewer using standard 3D navigation controls: click and drag to rotate the view, scroll to zoom in and out, and right-click and drag to pan the camera position.

The 3D viewer displays your model with basic lighting to show form and structure clearly. If your uploaded model contains existing textures or materials, these will be visible in the viewer. For untextured models, the viewer shows the raw geometry with a neutral material that allows you to assess the model's shape and detail level.

Reference Image System

The reference image system guides the texture generation process by providing visual examples of the desired material appearance. This system offers two approaches: uploading existing reference images or generating new reference images based on your 3D model.

Uploading Reference Images allows you to use any existing image as a material reference. This might be a photograph of a real material, artwork that captures the desired aesthetic, or images generated in other applications. The reference image upload button is located directly below the 3D model upload section in the settings panel.

When you upload a reference image, the AI analyzes the visual characteristics including color palette, texture patterns, surface properties, and lighting information. This analysis guides the texture generation process to create materials that match the visual style and properties of your reference.

Reference images work best when they clearly show the material properties you want to achieve. Close-up photographs of surfaces, clear material samples, and high-contrast images tend to produce accurate results. Results improve further when the reference shows the same type of object as your 3D model: when texturing an orc character, for example, a reference image of an orc will work better than a generic material sample, because the AI can better understand how to apply materials to a similar object. Avoid images with complex lighting or multiple different materials, as these can confuse the generation process.

Generating Reference Images provides an alternative when you don't have existing reference materials. This feature creates reference images based on your uploaded 3D model combined with text descriptions of your desired materials.

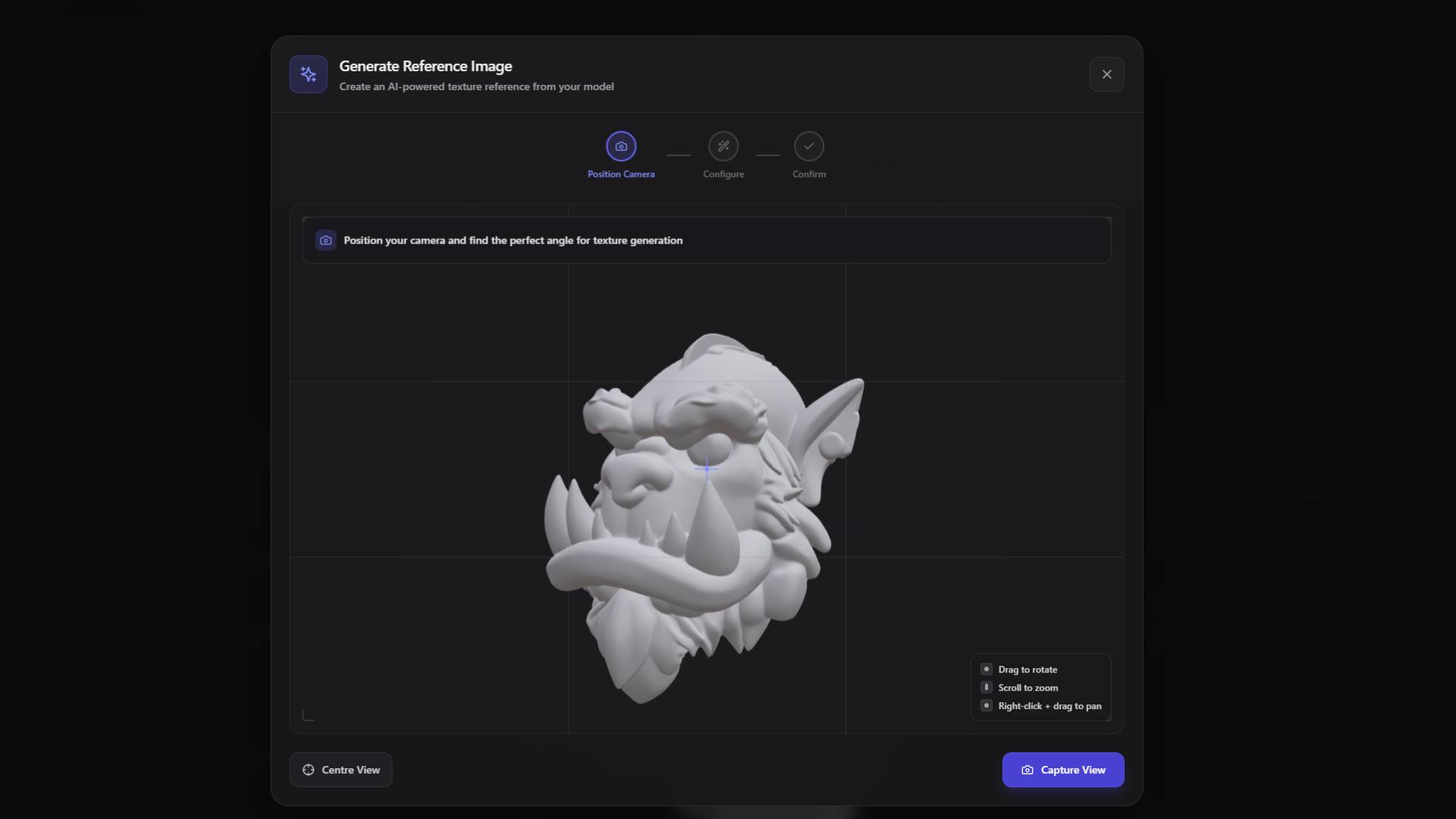

To generate a reference image, click the "Generate Ref" button located below the reference image upload area. This opens a modal window that contains its own 3D viewer with camera positioning controls.

Camera Capture for Reference Generation

The camera capture system within the reference generation modal allows you to create the perfect viewpoint of your 3D model for reference image creation. This system includes a camera finder interface that shows your model from the current camera position along with positioning controls.

Position the camera to capture your 3D model from the angle that best represents the surfaces where you want to apply textures. Consider which parts of the model will be most visible in your final application and focus the camera on those areas. The camera capture should show clear detail of the geometry and surface features that will receive the generated textures.

Use the standard 3D navigation controls within the camera finder to adjust your viewpoint. Rotate around the model to find the most informative angle, zoom to frame the model appropriately, and pan to center important features. The goal is to create a clear, well-lit view that shows the model's form and surface details.

Once you have positioned the camera to your satisfaction, click the "Capture View" button. This captures the current camera view as a static image that will be used as the base for reference image generation. The captured image shows your 3D model from the selected angle with the current lighting and framing.

AI Model Selection for Reference Generation

After capturing your camera view, you need to select which AI model to use for reference image generation. The choice depends on whether your 3D model already has existing textures and what type of modifications you want to make.

Flux Context Model is designed for models that already have existing materials or textures. This model excels at making targeted modifications to specific parts of existing textures while preserving the overall material character. Flux Context understands the relationship between different texture elements and can make coherent changes that maintain visual consistency.

Use Flux Context when you want to modify existing materials, change specific aspects of current textures, or enhance existing surface details. This model cannot generate completely new textures from scratch, so it requires some existing material information to work with.

GPT Edit Model is designed for generating entirely new textures on untextured models. Working from a text description, this model produces a reference image that depicts a complete set of materials, including complex ones. GPT Edit understands material properties and can create realistic surface characteristics based on descriptive prompts.

Use GPT Edit when working with models that have no existing textures, when you want to completely replace current materials, or when you need to generate complex multi-layered materials from text descriptions.
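
The selection guidance above reduces to a small decision rule. The sketch below is only a restatement of that guidance in code; the function name `recommend_reference_model` and its parameters are hypothetical and do not correspond to any 3D AI Studio API.

```python
def recommend_reference_model(has_textures: bool, replace_all: bool = False) -> str:
    """Map the guidance above to the model name shown in the interface.

    has_textures: the uploaded model already has materials or textures.
    replace_all:  you want to discard the current materials entirely.
    """
    # Flux Context needs existing material information and makes targeted changes.
    if has_textures and not replace_all:
        return "Flux Context"
    # GPT Edit covers untextured models and complete material replacement.
    return "GPT Edit"
```

For example, `recommend_reference_model(has_textures=True)` returns `"Flux Context"`, while an untextured model always maps to `"GPT Edit"`.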

Text Prompt System for Reference Generation

After selecting your AI model, you can provide text descriptions that guide the reference image generation process. The prompt system allows you to describe the materials, colors, surface properties, and visual characteristics you want to achieve.

Effective prompts describe specific material properties rather than abstract concepts. Match the complexity and style of the prompt to the AI model you selected: GPT Edit works from full descriptions of the object and its materials, while Flux Context works from short, targeted change instructions.

Example GPT Edit Prompts (for generating new textures on untextured models):

- "Create an orc character with green skin, red glowing eyes, and orange horns. The skin should have rough, bumpy texture with darker green patches. The horns should be smooth and glossy."

- "Generate a medieval knight armor with silver metallic finish, battle damage including scratches and dents, worn leather straps, and rust stains around the joints."

- "Design a fantasy sword with a dark steel blade, ornate golden crossguard with engravings, leather-wrapped handle, and a ruby gemstone in the pommel."

- "Create a dragon with dark red scales, lighter red belly, black wing membranes, golden spikes along the spine, and glowing yellow eyes."

Example Flux Context Prompts (for modifying existing materials):

- "Change the head color to be red"

- "Remove the scratches from the surface"

- "Make the eyes glow blue instead of yellow"

- "Add rust stains to the metal parts"

- "Change the fabric color to dark green"

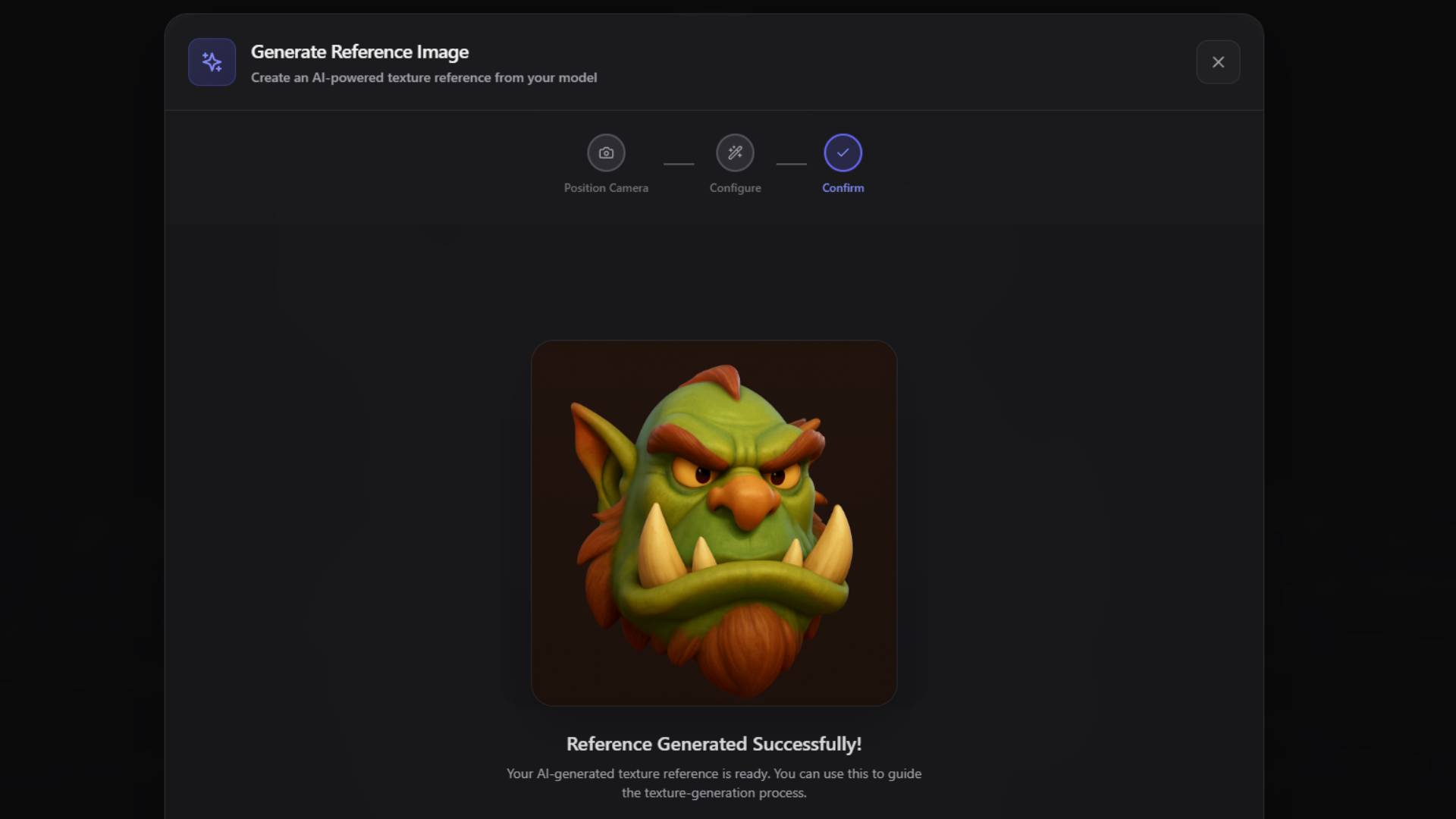

The AI uses your prompt in combination with the captured camera view to generate a reference image that shows how your 3D model might look with the described materials applied. This generated reference image then guides the actual texture generation process.

Automatic Reference Integration

After the reference image generation process completes, the newly created reference image automatically appears in the reference image upload box. You don't need to manually save and re-upload the generated reference; it is immediately available for the texture generation workflow.

You can review the generated reference image to ensure it matches your expectations before proceeding to texture generation. If the reference doesn't capture your intended vision, you can return to the camera capture system, adjust your viewpoint or prompt, and generate a new reference image.

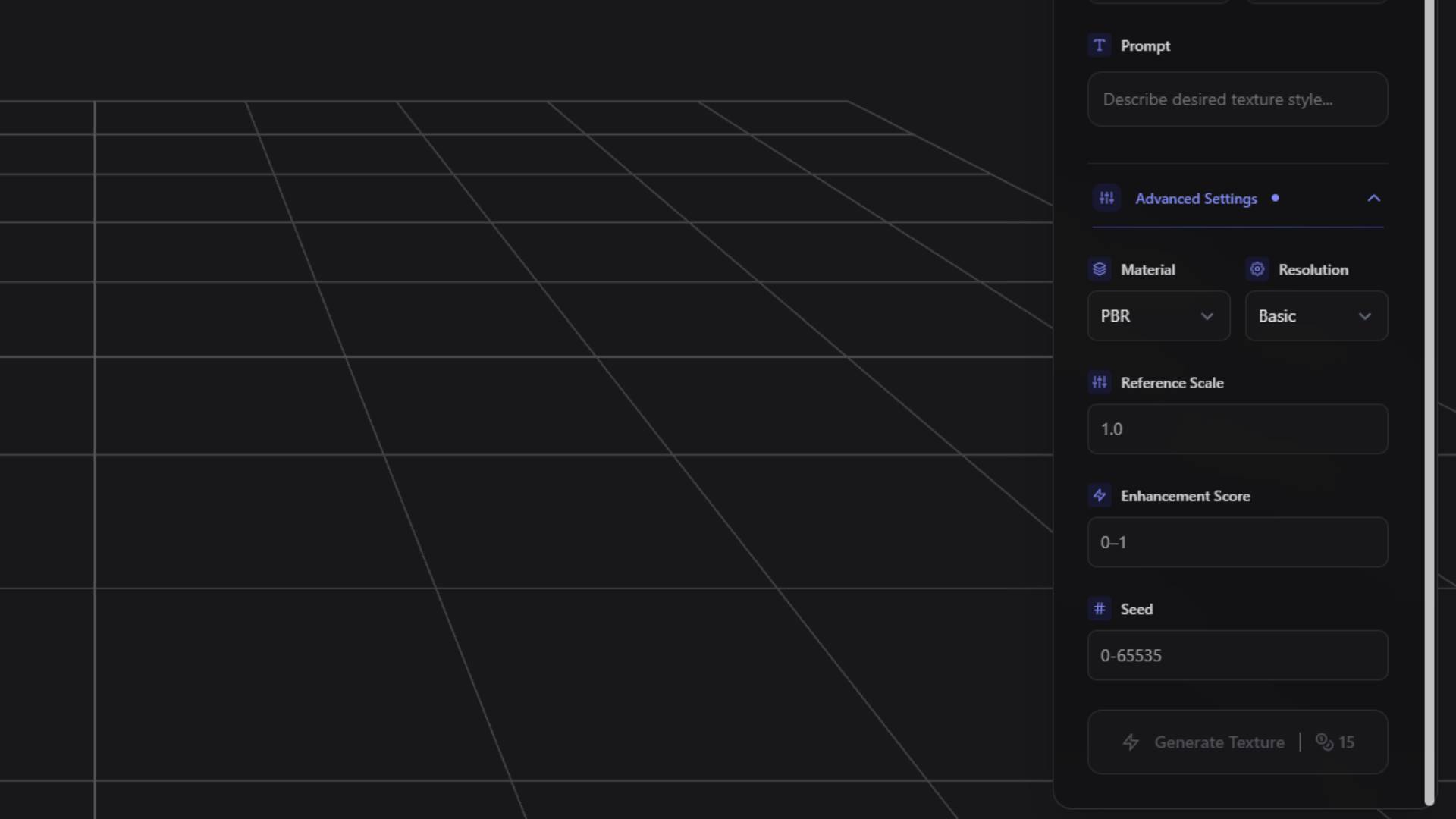

Additional Prompt Enhancement

Beyond the reference image system, Texture Generation provides an additional prompt input field that allows you to further refine and specify your texture requirements. This supplementary prompt works in conjunction with your reference image to provide more detailed guidance to the generation process.

The additional prompt can specify details that might not be clearly visible in the reference image, add context about intended use, or provide specific technical requirements for the generated textures. This extra level of control helps ensure that the final textures meet your exact specifications.

Use the additional prompt to specify material properties that are difficult to convey visually, such as specific roughness values, particular lighting behavior, or functional requirements like water resistance or wear patterns.

Advanced Generation Settings

The advanced settings section provides precise control over the technical aspects of texture generation, allowing you to optimize results for your specific application and quality requirements.

Material Type Selection determines the technical format of the generated textures. You can choose between PBR (Physically-Based Rendering) materials and basic shaded materials depending on your intended application.

PBR materials generate multiple texture maps including diffuse color, normal maps for surface detail, roughness maps for surface finish control, metallic maps that distinguish metal from non-metal surfaces, and additional maps as needed for complex materials. PBR materials provide the highest quality and most realistic results, making them ideal for professional applications, game development, and high-end visualization.

Basic shaded materials generate simpler texture sets focused primarily on color and basic surface characteristics. These materials have smaller file sizes and faster processing times, making them suitable for applications where technical complexity is less important than quick results.

Resolution Settings control the pixel dimensions of the generated texture maps. Higher resolutions provide more detail and allow for closer viewing or larger surface applications, while lower resolutions generate faster and use less storage space.

High resolution generates textures suitable for close-up viewing, detailed work, or large surface applications. These textures provide maximum detail but require more processing time and storage space.

Basic resolution creates textures optimized for general use, distant viewing, or applications where storage space is limited. Basic resolution textures generate quickly and work well for most standard applications.

Reference Scale controls how closely the generated texture follows the reference image. Higher reference scale values create textures that match the reference more precisely, while lower values allow for more creative interpretation and adaptation to the 3D model's specific geometry.

Adjust reference scale based on how exactly you want the final texture to match your reference. Use higher values when you have a specific material in mind and want precise replication. Use lower values when you want the reference to serve as inspiration while allowing the AI to adapt the material appropriately for your specific 3D model.

Enhancement Score determines the level of detail enhancement applied during texture generation. Higher enhancement scores create more detailed, complex textures with additional surface features and fine details. Lower enhancement scores produce cleaner, simpler textures with more uniform appearance.

Consider your intended viewing distance and application when setting enhancement scores. High enhancement works well for close-up viewing or hero objects, while lower enhancement suits background objects or distant viewing scenarios.

Seed Value provides reproducibility in texture generation. Using the same seed value with identical settings will produce consistent results, allowing you to make incremental adjustments to other parameters while maintaining overall texture character.

Record seed values when you achieve results you like, as this allows you to generate variations or make modifications while preserving successful texture characteristics.
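
One way to keep track of seeds is to store them together with the other settings that produced a result. The sketch below is a local note-keeping aid, not a 3D AI Studio file format: the class `TextureSettings`, its field names, and the example values are all assumptions, and the documentation does not state the valid ranges for Reference Scale or Enhancement Score.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class TextureSettings:
    """A record of the advanced settings used for one generation (hypothetical fields)."""
    material_type: str        # "pbr" or "basic"
    resolution: str           # "high" or "basic"
    reference_scale: float    # value as entered in the interface
    enhancement_score: float  # value as entered in the interface
    seed: int
    prompt: str = ""


def settings_to_json(settings: TextureSettings) -> str:
    """Serialize a settings record so it can be saved next to the downloaded model."""
    return json.dumps(asdict(settings), indent=2, sort_keys=True)


def settings_from_json(text: str) -> TextureSettings:
    """Restore a settings record saved with settings_to_json."""
    return TextureSettings(**json.loads(text))
```

To explore a variation while keeping the seed fixed, `dataclasses.replace(saved, enhancement_score=0.7)` returns a copy with only that field changed, which mirrors the incremental adjustments described above.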

Texture Generation Process

Once you have configured all settings, uploaded your model, and prepared your reference materials, click the "Generate Texture" button to begin the AI texture generation process. Generation typically takes about one minute, though times vary with model complexity, resolution settings, and current system load.

During generation, the interface provides progress indicators and status updates. The system processes your 3D model geometry, analyzes the reference image, applies the specified prompts, and generates the complete texture set according to your advanced settings.

The generation process creates all necessary texture maps for your chosen material type. For PBR materials, this includes diffuse color maps, normal maps for surface detail, roughness maps for finish control, metallic maps that distinguish metal from non-metal surfaces, and any additional specialized maps required for complex materials.

Results and 3D Preview

After texture generation completes, your newly textured 3D model appears in the central 3D viewer with all generated materials applied. The viewer updates automatically to show the textured result, allowing you to immediately evaluate the quality and appearance of the generated textures.

Use the 3D viewer controls to examine your textured model from different angles and lighting conditions. The viewer provides realistic lighting that shows how the generated materials interact with light sources, helping you assess the success of the generation process.

The textured model in the viewer represents the final result that will be available for download. All texture maps are properly applied and the model is ready for use in your target application.

Download and Integration

Upon completion of texture generation, your fully textured model becomes available in your Dashboard. Navigate to your Dashboard to access the completed model along with all generated texture files.

From the Dashboard, you can download the complete textured model in various formats suitable for different applications. The download includes both the 3D geometry and all generated texture maps, providing everything needed to use the model in external applications.

The Dashboard also provides access to post-processing tools that can further enhance your textured model. These tools include remesh options for geometry optimization, additional texture adjustments, and format conversion utilities.

Integration with 3D AI Studio Workflow

Texture Generation integrates seamlessly with other 3D AI Studio tools and workflows. Models created using Text to 3D or Image to 3D can be directly processed through Texture Generation without requiring external software or format conversions.

This integration allows for complete 3D creation workflows entirely within 3D AI Studio. You can generate a 3D model, apply AI-generated textures, perform any necessary post-processing, and prepare final assets all within the same platform.

For models created in external 3D software, Texture Generation provides a bridge that brings AI-powered texturing capabilities to your existing workflows. Generate textures within 3D AI Studio and then export the results back to your preferred 3D application for final scene assembly and rendering.

Best Practices and Optimization Tips

Successful texture generation depends on several factors that you can control to achieve optimal results. Understanding these factors helps you consistently create high-quality textured models.

Model Preparation significantly impacts texture generation quality. Ensure your 3D models have clean geometry with appropriate polygon density. Avoid extremely high-poly models that exceed the 20MB limit, but maintain sufficient detail to support the textures you want to generate.

If your model has existing UV mapping, ensure the UV coordinates fall within the standard 0-1 range. Well-organized UV layouts produce better texture generation results than overlapping or poorly arranged UV maps.
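
For OBJ files, the 0-1 UV range can be verified with a short script, since texture coordinates are stored as plain-text `vt` lines. The following is a minimal sketch under that assumption; the function name `uvs_outside_unit_square` is hypothetical, and the check does not detect overlapping UV islands.

```python
def uvs_outside_unit_square(obj_text: str, tolerance: float = 1e-6):
    """Return (line_number, u, v) for every OBJ 'vt' entry outside the 0-1 range."""
    offenders = []
    for line_number, line in enumerate(obj_text.splitlines(), start=1):
        parts = line.split()
        if len(parts) < 2 or parts[0] != "vt":
            continue  # not a texture-coordinate line
        u = float(parts[1])
        # The OBJ format allows v to be omitted, in which case it defaults to 0.
        v = float(parts[2]) if len(parts) > 2 else 0.0
        in_range = (-tolerance <= u <= 1 + tolerance
                    and -tolerance <= v <= 1 + tolerance)
        if not in_range:
            offenders.append((line_number, u, v))
    return offenders
```

Pass the file contents as text, for example `uvs_outside_unit_square(open("model.obj").read())`. An empty list means every texture coordinate lies within the standard range.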

Reference Image Quality directly affects generation results. Use high-quality reference images that clearly show the material properties you want to achieve. Avoid images with complex lighting, multiple materials, or unclear surface details.

When generating reference images using the camera capture system, position the camera to show your model clearly without dramatic shadows or extreme angles that might confuse the generation process.

Prompt Specificity helps achieve more accurate results. Use specific, descriptive language that focuses on material properties rather than abstract concepts. Include details about surface finish, color characteristics, texture patterns, and material behavior.

Settings Optimization should match your intended application. Use high resolution and PBR materials for professional applications requiring close-up viewing. Choose basic resolution and simpler materials for background objects or applications where file size is a concern.

Texture Generation provides powerful AI-driven capabilities that democratize high-quality 3D texturing, making professional-level results accessible regardless of your texturing experience or available time. The combination of flexible input options, intelligent AI processing, and seamless integration with 3D AI Studio workflows creates an efficient path from concept to finished textured 3D models.

Ready to begin texturing your 3D models? Navigate to the Texture Generator in your sidebar, or return to the 3D AI Studio Dashboard to access your existing projects and start applying AI-generated textures to enhance your 3D creations.