Text to 3D: Complete Generation Guide

Text to 3D generation transforms written descriptions into three-dimensional models through artificial intelligence. This comprehensive guide covers everything you need to know about using 3D AI Studio's Text to 3D capabilities, from basic navigation to advanced model configuration and optimization techniques.

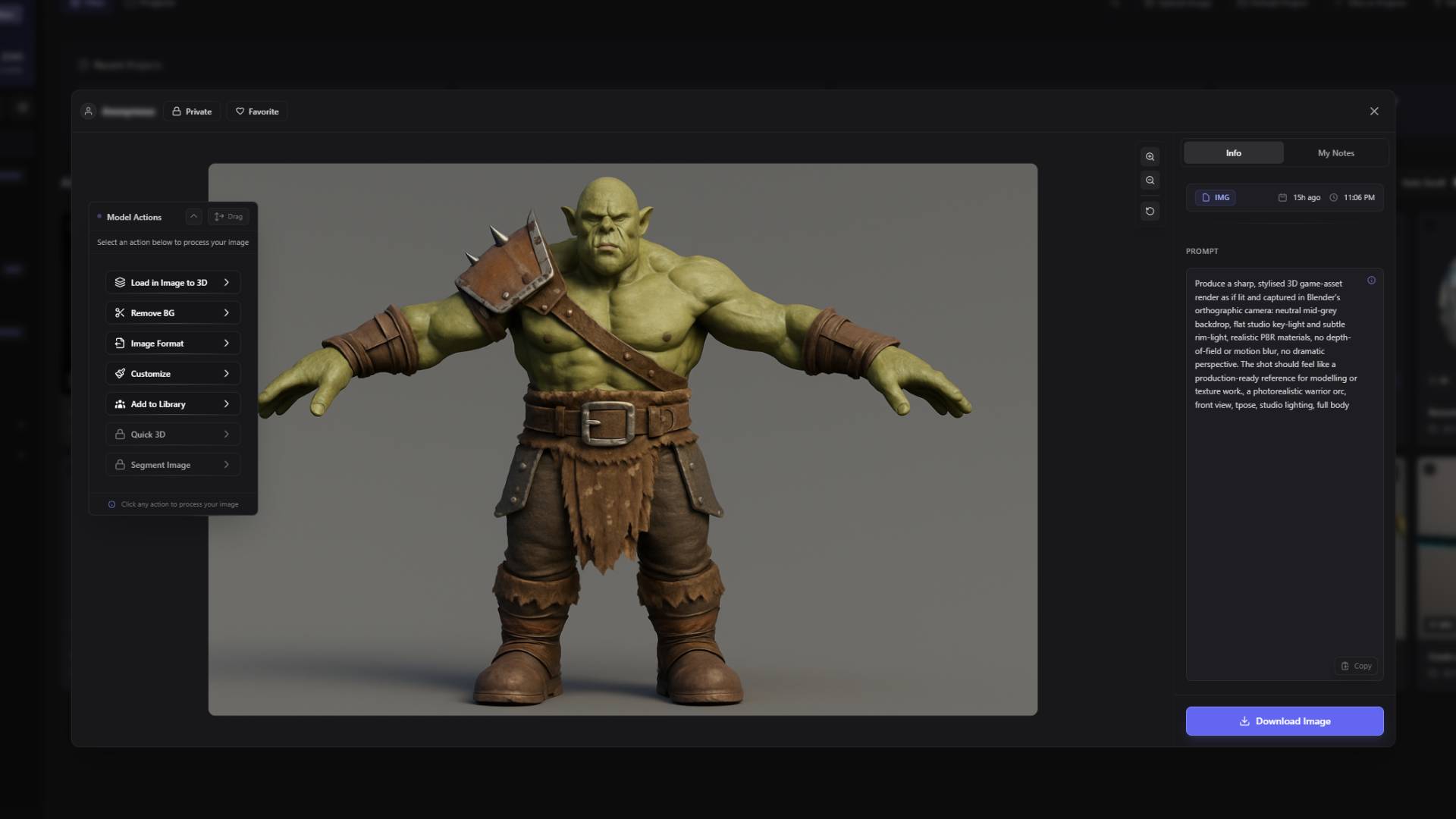

Recommended Workflow: Image Studio First

For optimal results and efficient credit usage, we recommend starting with Image Studio rather than direct Text to 3D generation. This workflow provides significantly more control over your final output while using fewer credits.

The process involves generating your desired image using the same text prompt in Image Studio, then editing and perfecting that image until it matches your exact vision. Once you're satisfied with the 2D result, use Image to 3D to convert your perfected image into a 3D model.

This approach allows you to refine visual details, adjust compositions, and ensure accuracy before committing to the more resource-intensive 3D generation process. Since image generation and editing consume fewer credits than 3D generation, you can iterate freely in 2D and only use 3D generation once you've achieved the perfect reference image.

Text to 3D remains valuable for rapid prototyping and conceptual exploration when you need quick results without detailed refinement.

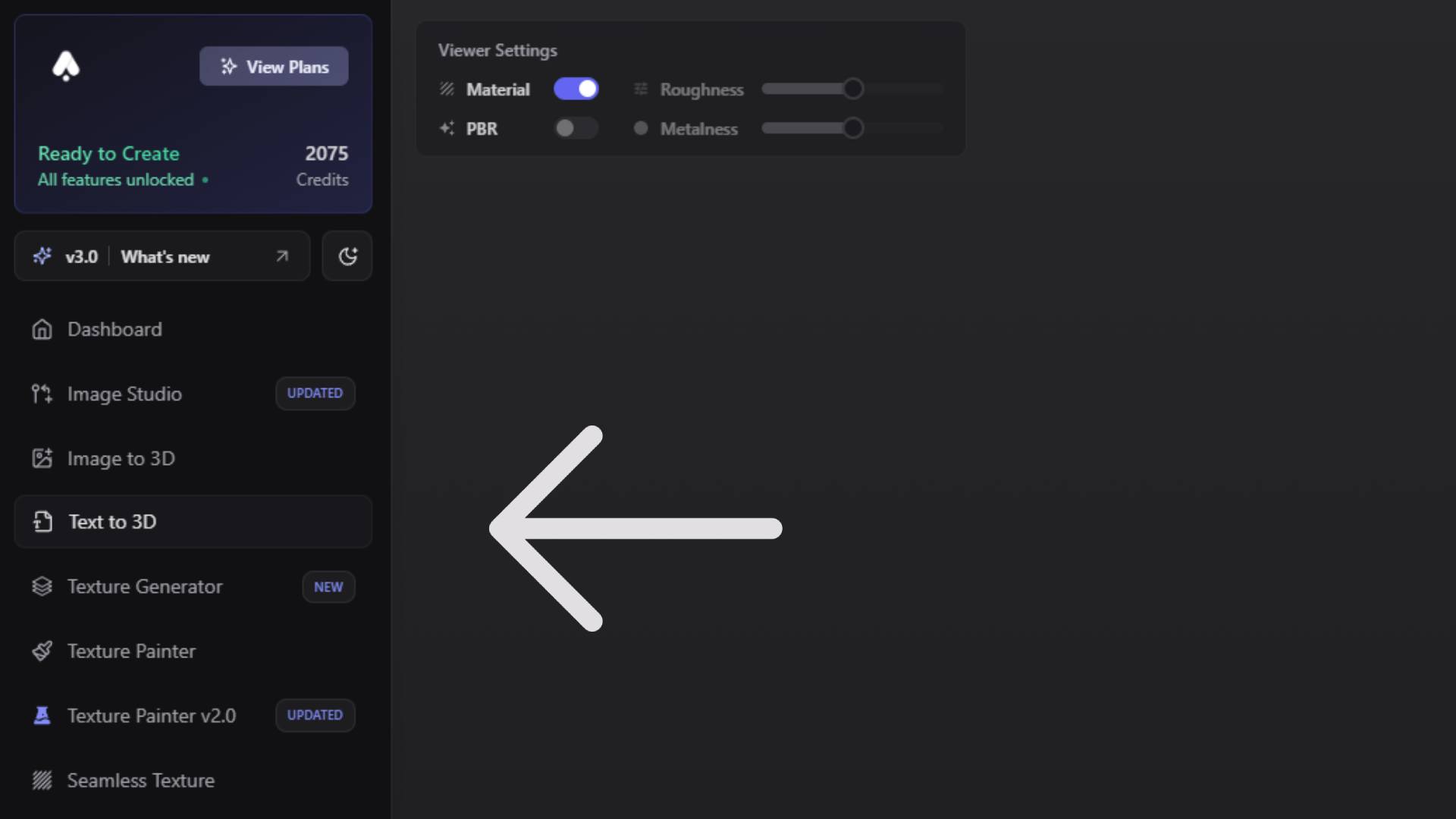

Accessing the Text to 3D Workspace

To get started, open the Text to 3D tool from the left sidebar, which is the main navigation for the entire 3D AI Studio platform. Clicking "Text to 3D" in the sidebar opens a workspace dedicated to text-based 3D model generation.

The workspace layout is intuitive and organized to support efficient creative workflows. The center of the interface features a prominent 3D viewer that displays your generated models in real-time, allowing you to examine your creations from every angle as they're processed. This central viewing area is where you'll spend most of your time evaluating and refining your generated models.

The interface prioritizes function and visual clarity. Related controls sit close to the viewing area they affect, so you spend less effort operating the tool and more on the creative side of 3D generation.

Understanding the 3D Viewer and Display Controls

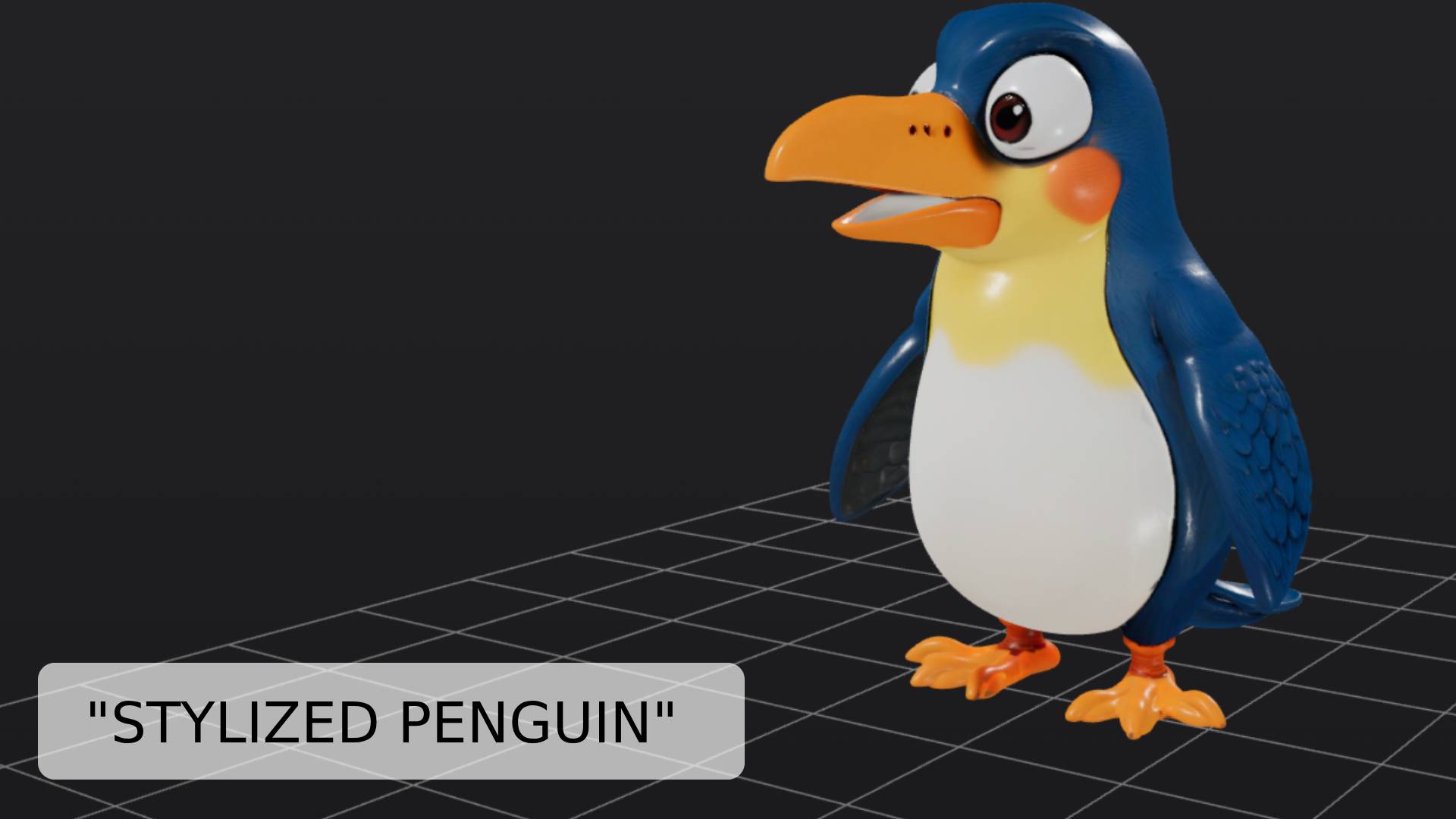

The 3D viewer at the center of the Text to 3D workspace goes beyond basic model display. Its material visualization options show how your generated models will look under different rendering conditions and in the applications where you plan to use them.

The viewer settings give you precise control over how materials are displayed and interpreted. You can toggle between different material visualization modes, including basic shaded display and full PBR (Physically Based Rendering) preview. The PBR preview mode shows materials with realistic lighting interactions, surface reflections, and material properties that closely approximate how the model will appear in professional 3D applications and game engines.

Fine-tuning material appearance involves adjusting roughness and metalness values directly within the viewer. Roughness controls how glossy or matte a surface appears under lighting: lower values produce sharp, mirror-like reflections, and higher values produce softer, more matte surfaces. Metalness determines whether a surface behaves like a metal or a non-metallic material, which changes how light reflects from it. Both controls give immediate visual feedback, so you can see how different material properties affect the final appearance of your model.
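To make the roughness and metalness behavior more concrete, here is a minimal sketch of how the standard metallic-roughness PBR model (as defined in the glTF 2.0 specification) typically uses these two values. It assumes the viewer follows that common convention, which this guide does not state; the function names are illustrative only.

```python
def specular_f0(base_color, metalness):
    """Reflectance at normal incidence (F0) for each RGB channel.

    Non-metals (metalness 0) reflect about 4% of light with no tint;
    metals (metalness 1) reflect light tinted by their base color.
    Values in between blend linearly, as in the glTF 2.0 BRDF.
    """
    if not 0.0 <= metalness <= 1.0:
        raise ValueError("metalness must be in [0, 1]")
    dielectric_f0 = 0.04
    return tuple(dielectric_f0 + (c - dielectric_f0) * metalness for c in base_color)


def alpha_roughness(roughness):
    """Perceptual roughness is squared before it drives the microfacet model."""
    if not 0.0 <= roughness <= 1.0:
        raise ValueError("roughness must be in [0, 1]")
    return roughness * roughness


gold = (1.0, 0.766, 0.336)
print(specular_f0(gold, 0.0))  # (0.04, 0.04, 0.04): behaves like a non-metal
print(specular_f0(gold, 1.0))  # approximately the base color: gold-tinted reflections
print(alpha_roughness(0.5))    # 0.25
```

Squaring roughness is why the slider feels perceptually even: small changes near zero keep reflections sharp, while the upper half of the range quickly turns a surface matte.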

The rotation control system offers both automatic and manual viewing options. When automatic rotation is enabled, generated models slowly rotate in the viewer, providing a comprehensive view of all surface details and angles without requiring manual interaction. This automatic rotation is particularly useful when evaluating model quality and identifying areas that might need refinement. You can disable automatic rotation when you need to examine specific details or angles more carefully, giving you full manual control over the viewing perspective.

Project organization capabilities within the viewer interface include the ability to set a default project destination. When you configure a default project, all future Text to 3D generations automatically organize into that project folder, eliminating the need for manual sorting and ensuring that related models remain grouped together. This automated organization becomes increasingly valuable as you develop larger collections of generated content and need to maintain clear project separation.

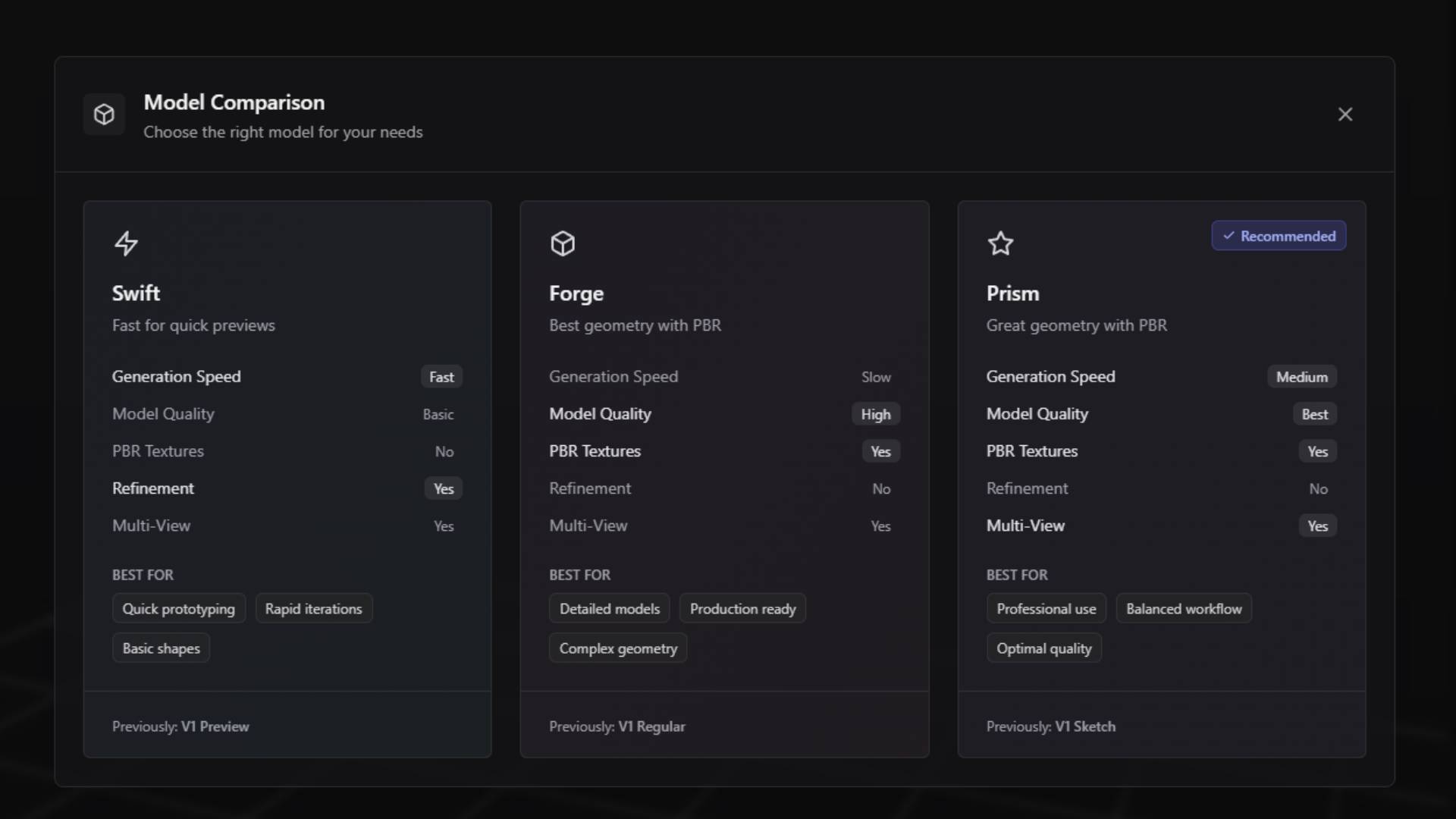

Model Selection: Understanding Swift, Forge, and Prism

The Text to 3D system offers three distinct AI models, each optimized for different use cases and quality requirements. Understanding the characteristics and appropriate applications for each model enables you to select the optimal generation approach for your specific project needs and time constraints.

The Swift model prioritizes generation speed over output quality, making it ideal for rapid prototyping and iterative design exploration. Swift generation typically completes within seconds, allowing you to quickly test multiple concepts and prompt variations without significant time investment. The model quality from Swift is intentionally basic, designed to provide quick visual confirmation of general shape and form concepts rather than detailed, production-ready results.

Swift excels in scenarios where you need to rapidly evaluate multiple design directions or test how different prompt variations affect overall model structure. The basic quality output provides sufficient detail to understand proportions, general shape characteristics, and overall design feasibility without the computational overhead required for high-quality results. This makes Swift particularly valuable during the conceptual phases of projects where speed of iteration outweighs final output quality.

The model works best with straightforward geometric shapes and clear, simple descriptions. Complex organic forms, intricate details, and sophisticated material requirements often exceed Swift's capabilities, but for basic architectural elements, simple mechanical parts, and geometric primitives, Swift provides adequate results in minimal time.

The Forge model represents a significant step up in both quality and generation time. Forge produces very high model quality with sophisticated geometric detail and comprehensive material support, including full PBR texture generation. Generation takes considerably longer than with Swift, but the resulting models meet professional quality standards suitable for commercial applications.

Forge includes multi-view generation capabilities, although this feature doesn't significantly impact text-to-3D workflows since multi-view generation is primarily designed for image-based model creation. However, the underlying technology that enables multi-view processing contributes to Forge's ability to generate geometrically consistent and well-proportioned models from text descriptions.

The model excels at creating detailed organic forms, complex mechanical assemblies, and architecturally accurate structures. Material generation through Forge produces realistic surface textures with appropriate roughness, metalness, and color values that work seamlessly with professional 3D applications and game engines. The quality level makes Forge-generated models suitable for close-up visualization, detailed product presentations, and high-end rendering applications.

The Prism model matches Forge's top-tier output quality while generating slightly faster, which makes it the best balance of quality and efficiency for most professional applications. Like Forge, Prism produces very high model quality with comprehensive PBR texture support and includes the same multi-view capabilities, ensuring geometric accuracy and visual consistency.

Prism's advanced processing algorithms produce models with exceptional surface detail, accurate proportions, and sophisticated material interpretation. The model demonstrates particular strength in understanding complex prompt descriptions and translating nuanced requirements into appropriate geometric and material characteristics. This makes Prism especially valuable for projects requiring precise adherence to detailed specifications or sophisticated aesthetic requirements.

Because Prism is faster than Forge while delivering equivalent quality, it is the preferred choice for most professional workflows where both quality and efficiency matter. It handles complex organic forms, detailed mechanical components, and sophisticated architectural elements with equal proficiency, producing results that meet the highest standards for commercial and artistic applications.

Swift Model Configuration and Usage

Working with the Swift model involves a streamlined configuration process designed to maximize generation speed while providing essential creative control. The settings interface for Swift reflects its focus on rapid iteration and basic quality output, presenting only the most fundamental parameters needed for effective text-to-3D generation.

The primary input for Swift generation is the text prompt, where you describe the object you want to create. Swift responds well to clear, direct descriptions that focus on overall shape, basic proportions, and general characteristics rather than intricate details. Effective Swift prompts typically include the primary object type, basic size or scale references, and fundamental shape characteristics.

The negative prompt capability allows you to specify elements that should be avoided or excluded from the generation. This control mechanism helps refine results by eliminating unwanted characteristics that might otherwise appear in the generated model. Effective negative prompts for Swift include common problematic elements like unwanted appendages, inappropriate materials, or geometric distortions that frequently occur with rapid generation processes.

Since Swift prioritizes speed over complexity, the prompt interpretation focuses on primary elements rather than subtle details. Complex material descriptions, intricate surface patterns, or sophisticated lighting requirements often get simplified or ignored during Swift generation. Understanding these limitations helps you craft prompts that work within Swift's capabilities while achieving useful results for prototyping and concept development.

The rapid generation cycle of Swift makes it ideal for prompt experimentation and refinement. You can quickly test multiple variations of similar prompts to understand how different word choices and descriptions affect the generated results. This rapid feedback loop enables efficient prompt engineering and helps you develop more effective descriptions for use with higher-quality models.

Forge Model Advanced Configuration

The Forge model provides sophisticated configuration options that enable precise control over generation quality, material properties, and output characteristics. These advanced settings reflect Forge's position as a professional-grade generation tool designed for users who need reliable, high-quality results suitable for commercial applications.

Prompt input for Forge supports more complex and nuanced descriptions compared to Swift. The model's advanced processing capabilities enable interpretation of detailed material specifications, sophisticated geometric requirements, and complex spatial relationships. Effective Forge prompts can include specific material types, surface treatments, proportional relationships, and aesthetic style references.

The material rendering choice between shaded and PBR affects both generation processing and final output characteristics. Shaded mode produces models with simplified material representation suitable for basic visualization and general-purpose applications. PBR mode generates full physically-based materials with appropriate texture maps, metalness values, roughness characteristics, and color information that work seamlessly with professional rendering engines and game development pipelines.

Quality settings provide granular control over generation resolution and detail levels. The high-quality setting produces maximum detail and geometric accuracy but requires the longest processing time. Medium quality offers a balanced approach suitable for most applications, while low and extra-low settings trade detail for faster processing speeds. Understanding the relationship between quality settings and intended application helps you optimize generation time while achieving appropriate results.

The seed parameter enables reproducible generation results, allowing you to generate variations of the same base model or return to specific successful generations. When you find a generation result that closely matches your requirements, noting the seed value enables you to generate similar models with slight prompt modifications while maintaining consistent base characteristics.
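The idea behind a seed is that a pseudo-random generator started from the same value always produces the same sequence. The sketch below illustrates only that general principle using Python's standard `random` module; it does not show how 3D AI Studio uses the seed internally, and on the platform reproducibility also assumes the prompt and other settings are unchanged. The `sample_noise` helper is hypothetical.

```python
import random


def sample_noise(seed, n=5):
    """Draw n pseudo-random values from a generator initialized with `seed`."""
    rng = random.Random(seed)  # independent generator; global state untouched
    return [rng.random() for _ in range(n)]


first = sample_noise(seed=1234)
second = sample_noise(seed=1234)
different = sample_noise(seed=5678)

print(first == second)     # True: same seed, same sequence
print(first == different)  # a different seed gives a different sequence
```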

Bounding box functionality provides dimensional control over generated models, enabling you to specify the maximum size constraints for the resulting geometry. This feature is particularly valuable when generating models that need to fit specific spatial requirements or maintain consistent scaling relationships with other elements in your project. The bounding box parameters accept real-world measurements, ensuring that generated models meet precise dimensional specifications.
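If you need to check or reproduce a size constraint outside the platform, for example when placing an exported model into an existing scene, the largest uniform scale factor that fits a model inside a box can be computed as below. This is a generic sketch, not the platform's implementation; the `Box` type, the axis-aligned assumption, and the use of meters are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned extents, assumed to be in meters (width, depth, height)."""
    x: float
    y: float
    z: float


def uniform_fit_scale(model: Box, limit: Box) -> float:
    """Largest uniform scale factor that keeps `model` inside `limit`.

    A single factor for all axes preserves the model's proportions, so the
    most constrained axis decides the result. Values above 1.0 mean the
    model could be enlarged and still fit.
    """
    if any(d <= 0 for d in (model.x, model.y, model.z)):
        raise ValueError("model extents must be positive")
    return min(limit.x / model.x, limit.y / model.y, limit.z / model.z)


wardrobe = Box(1.0, 0.5, 2.0)
alcove = Box(1.0, 1.0, 1.5)
s = uniform_fit_scale(wardrobe, alcove)
print(s)  # 0.75: height is the tightest constraint
```

Scaling each axis independently would also fit the box, but it would distort the model, which is usually not what a dimensional constraint intends.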

The AOT pose option specifically addresses human-like model generation by providing specialized processing algorithms designed for organic forms and character-based objects. When enabled, AOT pose processing improves the anatomical accuracy and natural positioning of human and animal figures, reducing common issues like unnatural limb positioning or proportion inconsistencies that can occur with general-purpose geometric processing.

Prism Model Professional Configuration

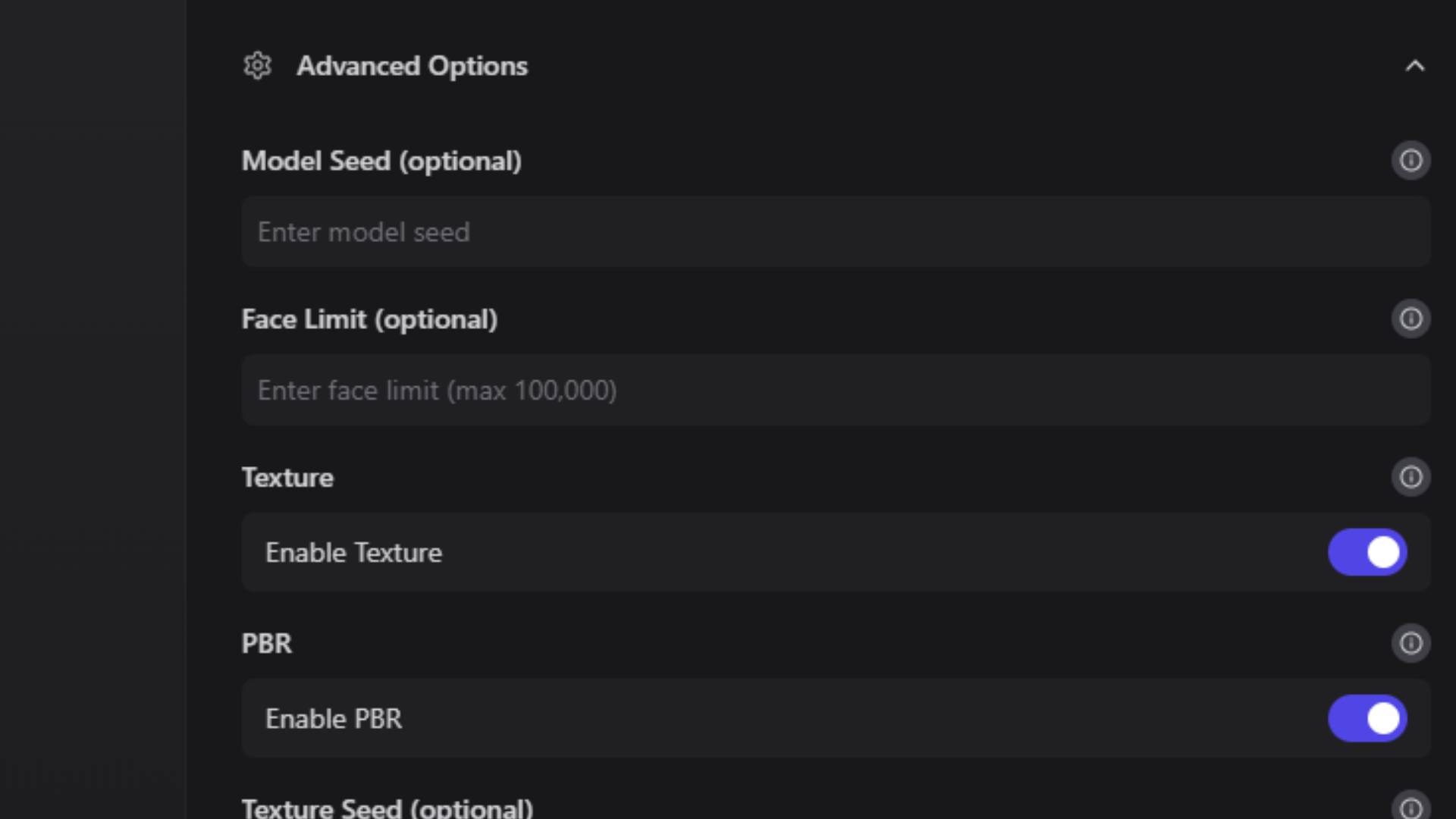

Prism model configuration provides the most comprehensive set of advanced settings available in the Text to 3D system, reflecting its position as the premium generation option for users requiring maximum quality and precise control over output characteristics. The extensive configuration options enable fine-tuning of virtually every aspect of the generation process.

Prompt processing in Prism incorporates sophisticated natural language understanding that can interpret complex, multi-layered descriptions with exceptional accuracy. The model excels at understanding contextual relationships between different prompt elements, enabling descriptions that include multiple objects, complex spatial arrangements, and sophisticated material combinations within a single generation request.

The model seed parameter in Prism provides enhanced control over generation consistency and variation management. Unlike simpler models, Prism's seed system enables fine-grained control over different aspects of the generation process, allowing you to maintain consistency in overall structure while varying specific details or material characteristics. This sophisticated seed system supports advanced workflows where maintaining design coherence across multiple related models is essential.

The face limit sets the maximum number of polygon faces in the generated mesh. This parameter balances geometric detail against processing requirements, letting you choose the mesh complexity appropriate for your intended application. Higher face limits produce more detailed surfaces but require increased processing time and result in larger file sizes.
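Because a face limit is a cap on polygon count, the same arithmetic is useful when an exported model must later fit a tighter budget for a specific target. Mesh decimation tools such as Blender's Decimate modifier (Collapse mode) take a ratio of faces to keep; the hypothetical helper below computes it. This is an illustration of the budget trade-off, not how Prism applies the limit internally.

```python
def decimation_ratio(current_faces: int, face_limit: int) -> float:
    """Fraction of faces to keep so the mesh fits within `face_limit`.

    Returns 1.0 when the mesh is already within budget (no reduction needed).
    """
    if current_faces <= 0 or face_limit <= 0:
        raise ValueError("face counts must be positive")
    return min(1.0, face_limit / current_faces)


print(decimation_ratio(200_000, 50_000))  # 0.25: keep a quarter of the faces
print(decimation_ratio(30_000, 50_000))   # 1.0: already under the limit
```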

Texture generation controls offer comprehensive management of surface detail creation and application. The enable texture option determines whether the model receives detailed surface textures or relies on basic material colors. When texture generation is enabled, the system creates appropriate surface patterns, wear characteristics, and material-specific details that enhance visual realism and surface interest.

PBR material generation produces complete physically-based material definitions including diffuse color maps, normal maps for surface detail, roughness maps for surface finish characteristics, and metallic maps for material type identification. These comprehensive material definitions ensure that Prism-generated models integrate seamlessly with professional rendering workflows and maintain visual consistency across different lighting environments and applications.
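As an example of how these maps plug into a standard format, the sketch below builds a glTF 2.0 material entry that references them. Whether 3D AI Studio exports glTF, and which texture indices it uses, are assumptions here; the field names themselves come from the glTF 2.0 specification. Note that glTF stores roughness in the green channel and metalness in the blue channel of one combined `metallicRoughnessTexture`, so separate roughness and metallic maps have to be packed into a single image for this format.

```python
import json


def gltf_pbr_material(name, base_color_tex, normal_tex, metal_rough_tex):
    """Build a glTF 2.0 material dict that references texture indices."""
    return {
        "name": name,
        "pbrMetallicRoughness": {
            "baseColorTexture": {"index": base_color_tex},
            # Roughness is read from G, metalness from B of this one texture.
            "metallicRoughnessTexture": {"index": metal_rough_tex},
            # Factors multiply the sampled values; 1.0 leaves them unchanged.
            "metallicFactor": 1.0,
            "roughnessFactor": 1.0,
        },
        # The normal map lives on the material itself, not inside pbrMetallicRoughness.
        "normalTexture": {"index": normal_tex},
    }


material = gltf_pbr_material("generated_model", 0, 1, 2)
print(json.dumps(material, indent=2))
```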

The texture seed parameter provides independent control over surface pattern generation, enabling you to maintain consistent geometric characteristics while varying surface treatments. This separation of geometric and texture generation enables sophisticated design exploration where you can evaluate different surface approaches for the same base model without regenerating the entire geometry.
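The benefit of separate geometry and texture seeds can be shown with a toy two-stage generator in which each stage draws from its own pseudo-random source. The `generate` function below is purely hypothetical and stands in for the real pipeline; it only demonstrates why changing the texture seed leaves the geometry untouched.

```python
import random


def generate(geometry_seed, texture_seed):
    """Toy stand-in for a two-stage generator (hypothetical).

    Each stage uses its own generator, so changing one seed
    cannot disturb the other stage's output.
    """
    geo_rng = random.Random(geometry_seed)
    tex_rng = random.Random(texture_seed)
    shape = [round(geo_rng.uniform(0, 1), 3) for _ in range(3)]
    texture = [round(tex_rng.uniform(0, 1), 3) for _ in range(3)]
    return shape, texture


base_shape, tex_a = generate(geometry_seed=42, texture_seed=1)
same_shape, tex_b = generate(geometry_seed=42, texture_seed=2)
print(base_shape == same_shape)  # True: geometry unchanged
print(tex_a == tex_b)            # False: a new surface treatment
```

Had both stages shared one generator, the geometry draws would consume part of the sequence first, and any change to that stage would shift every texture value as well.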

Texture quality settings optimize the resolution and detail level of generated surface textures based on intended application requirements. High-quality textures provide maximum detail suitable for close-up visualization and high-resolution rendering, while lower quality settings reduce file sizes and processing requirements for applications where texture detail is less critical.

Auto size functionality automatically scales generated models to real-world dimensions based on the prompt description and object type recognition. This intelligent scaling ensures that generated furniture appears at furniture scale, architectural elements maintain appropriate proportions, and mechanical components reflect realistic sizing relationships. The auto size system reduces the manual scaling work required to integrate generated models into real-world applications and architectural visualizations.

Workflow Recommendations and Best Practices

While Text to 3D provides powerful direct generation capabilities, understanding optimal workflow approaches can significantly improve your results and creative efficiency. The most effective approach for many projects involves combining Text to 3D with other 3D AI Studio tools to leverage the strengths of each system while minimizing their individual limitations.

The recommended workflow for achieving maximum control and quality begins with image generation in the Image Studio. Starting with Image Studio allows you to create detailed visual references that precisely capture your design intent, aesthetic preferences, and specific details that might be difficult to describe accurately in text form. Image Studio provides comprehensive editing capabilities that enable refinement and perfection of visual concepts before committing to 3D generation.

After creating and perfecting your reference image in Image Studio, the Image to 3D system provides more precise control over the generation process compared to text-only descriptions. Image to 3D can analyze visual details, understand spatial relationships, and interpret material characteristics directly from the reference image, often producing more accurate results than text descriptions alone.

This two-step workflow leverages the strengths of both systems while providing multiple opportunities for refinement and iteration. Image Studio excels at rapid visual iteration and detailed editing, while Image to 3D provides sophisticated analysis of visual information and accurate translation into three-dimensional form. The combination delivers superior results compared to either system used independently.

Text to 3D remains valuable for rapid prototyping, conceptual exploration, and situations where you need to quickly generate basic geometric forms for further development. The speed and simplicity of text-based generation make it ideal for exploring multiple design directions, testing prompt effectiveness, and developing initial concepts that can be refined through other tools.

Understanding when to use Text to 3D directly versus when to employ the Image Studio workflow depends on project requirements, quality expectations, and available time. Text to 3D works well for geometric objects with clear, simple characteristics, basic architectural elements, and situations where general form is more important than specific details. The Image Studio workflow provides superior results for complex organic forms, detailed mechanical assemblies, and projects requiring precise aesthetic control.

Effective prompt engineering for Text to 3D involves understanding how different models interpret language and respond to various description approaches. Clear, specific language generally produces better results than vague or overly complex descriptions. Including material specifications, scale references, and basic proportion information helps the AI systems generate more accurate interpretations of your requirements.

Testing and iteration remain essential components of successful Text to 3D workflows. The rapid generation capabilities of the Swift model make it ideal for testing prompt variations and understanding how different description approaches affect results. Once you identify effective prompt structures, you can apply them to higher-quality models for final production.

Advanced Generation Techniques and Optimization

Mastering Text to 3D generation involves understanding advanced techniques that go beyond basic prompt entry and model selection. These sophisticated approaches enable you to achieve consistent, high-quality results while minimizing generation time and maximizing creative control over the output characteristics.

Prompt engineering represents one of the most critical skills for effective Text to 3D generation. Advanced prompt construction involves layering different types of descriptive information in specific orders that optimize AI interpretation. Primary object identification should appear early in the prompt, followed by material specifications, then proportional relationships, and finally aesthetic or stylistic modifiers.
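One way to apply this ordering consistently is to assemble prompts from labeled parts. The helper below is a hypothetical sketch of that habit, not a platform feature; it simply joins the subject, materials, proportions, and style modifiers in the order recommended above.

```python
def build_prompt(subject, materials=(), proportions=(), style=()):
    """Assemble a prompt in the order: subject, materials, proportions, style."""
    if not subject.strip():
        raise ValueError("subject is required")
    parts = [subject.strip()]
    for group in (materials, proportions, style):
        parts.extend(p.strip() for p in group if p.strip())  # skip empty entries
    return ", ".join(parts)


prompt = build_prompt(
    "wooden rocking chair",
    materials=["oak frame", "woven rattan seat"],
    proportions=["tall curved backrest", "wide armrests"],
    style=["Scandinavian minimalist"],
)
print(prompt)
# wooden rocking chair, oak frame, woven rattan seat, tall curved backrest, wide armrests, Scandinavian minimalist
```

Keeping the parts separate also makes it easy to drop the style group when testing with Swift, then add it back for Forge or Prism.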

Understanding model-specific prompt interpretation differences enables you to optimize descriptions for each generation system. Swift responds best to simple, direct language focusing on primary characteristics. Forge and Prism can interpret more complex descriptions but benefit from structured approaches that separate geometric requirements from material specifications and aesthetic preferences.

Seed management strategies enable consistent results across multiple generation sessions and provide controlled variation capabilities. Recording successful seed values along with their corresponding prompts creates a library of reliable generation parameters that can be modified for related projects. This systematic approach reduces the trial-and-error typically associated with AI generation while building expertise in prompt effectiveness.
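A seed library can be as simple as a JSON file of records. The sketch below is one possible layout under stated assumptions: the field names, the optional `texture_seed` for Prism, and the seed value shown are all hypothetical placeholders, not real generation results or a platform export format.

```python
import json
import os
import tempfile
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class SeedRecord:
    model: str                          # "Swift", "Forge", or "Prism"
    prompt: str
    seed: int
    texture_seed: Optional[int] = None  # Prism only (assumed field)
    notes: str = ""


def save_log(records, path):
    with open(path, "w", encoding="utf-8") as f:
        json.dump([asdict(r) for r in records], f, indent=2)


def load_log(path):
    with open(path, encoding="utf-8") as f:
        return [SeedRecord(**d) for d in json.load(f)]


def find(records, keyword):
    """Case-insensitive search of saved prompts."""
    k = keyword.lower()
    return [r for r in records if k in r.prompt.lower()]


log = [SeedRecord("Prism", "oak rocking chair, woven rattan seat", 1234,
                  texture_seed=99, notes="good proportions")]
path = os.path.join(tempfile.mkdtemp(), "seed_log.json")
save_log(log, path)
restored = load_log(path)
print(find(restored, "rattan")[0].seed)  # 1234
```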

Quality optimization involves understanding the relationship between generation settings, processing time, and intended application requirements. Matching quality levels to specific use cases prevents over-processing for applications where maximum detail isn't necessary while ensuring adequate quality for demanding applications. This strategic approach to quality selection improves workflow efficiency and resource utilization.

Material specification techniques involve understanding how different models interpret material descriptions and translate them into appropriate surface characteristics. Effective material prompts include specific material types, surface treatments, wear characteristics, and finish qualities that provide clear guidance for AI interpretation. Understanding the vocabulary that produces consistent material results enables predictable surface generation across different models and projects.

Dimensional control strategies utilize bounding box settings, auto-sizing capabilities, and scale references within prompts to ensure generated models meet specific size requirements. Effective dimensional control reduces post-generation scaling work while ensuring that models integrate properly with existing project elements and real-world applications.

The Text to 3D system provides powerful capabilities for rapid 3D model generation directly from text descriptions, supporting everything from quick conceptual prototypes to professional-quality finished models. Understanding the characteristics and optimal applications for each model type, combined with effective prompt engineering and workflow integration, enables you to leverage these capabilities effectively for your specific creative and commercial requirements.

Visit the 3D AI Studio Dashboard to begin your Text to 3D generation projects, or explore Image Studio to learn about the recommended workflow that combines image generation with 3D conversion for maximum creative control.