Image to 3D: Complete Generation Guide

Image to 3D conversion turns two-dimensional pictures into fully textured three-dimensional models. This guide walks through the Image to 3D workspace, explains how the different AI models interpret your images, and recommends workflows that give you the most creative control. The goal is to give you enough context to feel comfortable whether you are uploading a single photograph, combining multiple reference images, or processing an entire batch in one go.

Accessing the Image to 3D Workspace

The Image to 3D tool sits in the same left-hand sidebar that holds the rest of 3D AI Studio's creative features. A single click on the Image to 3D label opens a familiar layout that mirrors the Text to 3D interface, so if you have already experimented with text-based generation, everything will feel instantly recognisable. The centre of the screen is dominated by the real-time 3D viewer, the right side holds all model and generation settings, and the lower area displays status information as your model is processed.

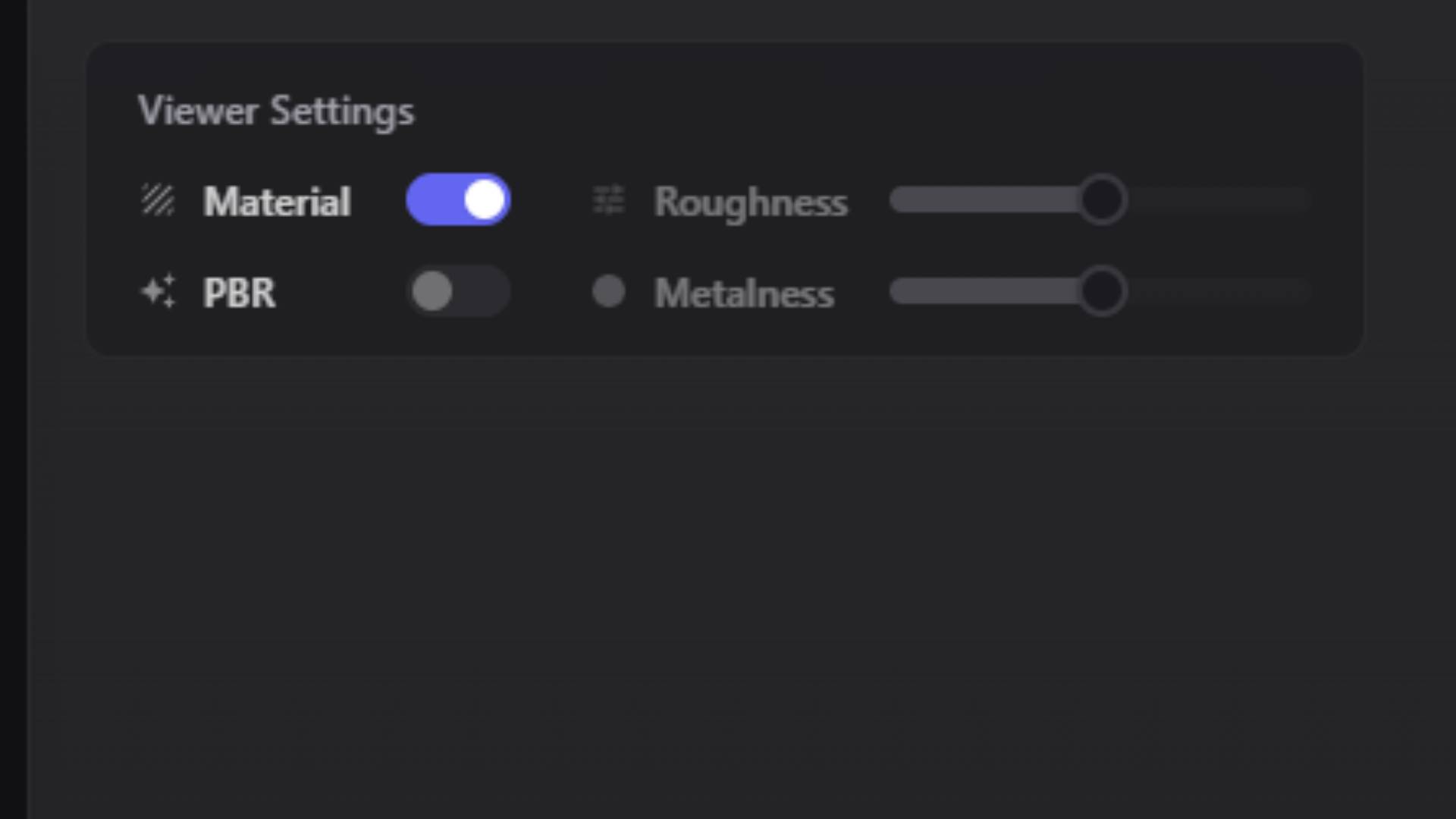

Understanding the 3D Viewer and Display Controls

The central viewer behaves exactly like its counterpart in Text to 3D, delivering real-time feedback as soon as a model finishes rendering. You can switch between a basic shaded display and a full PBR preview that honours roughness and metalness values. A pair of simple sliders lets you nudge those material parameters until the surface finish matches your creative intent. Rotation can be left on for an effortless turntable view, or switched off whenever you need to inspect details from a fixed angle. Setting a default project is as straightforward as choosing a folder name in the dropdown beneath the viewer. Once selected, every future Image to 3D generation drops into that project automatically, keeping libraries organised without manual sorting.

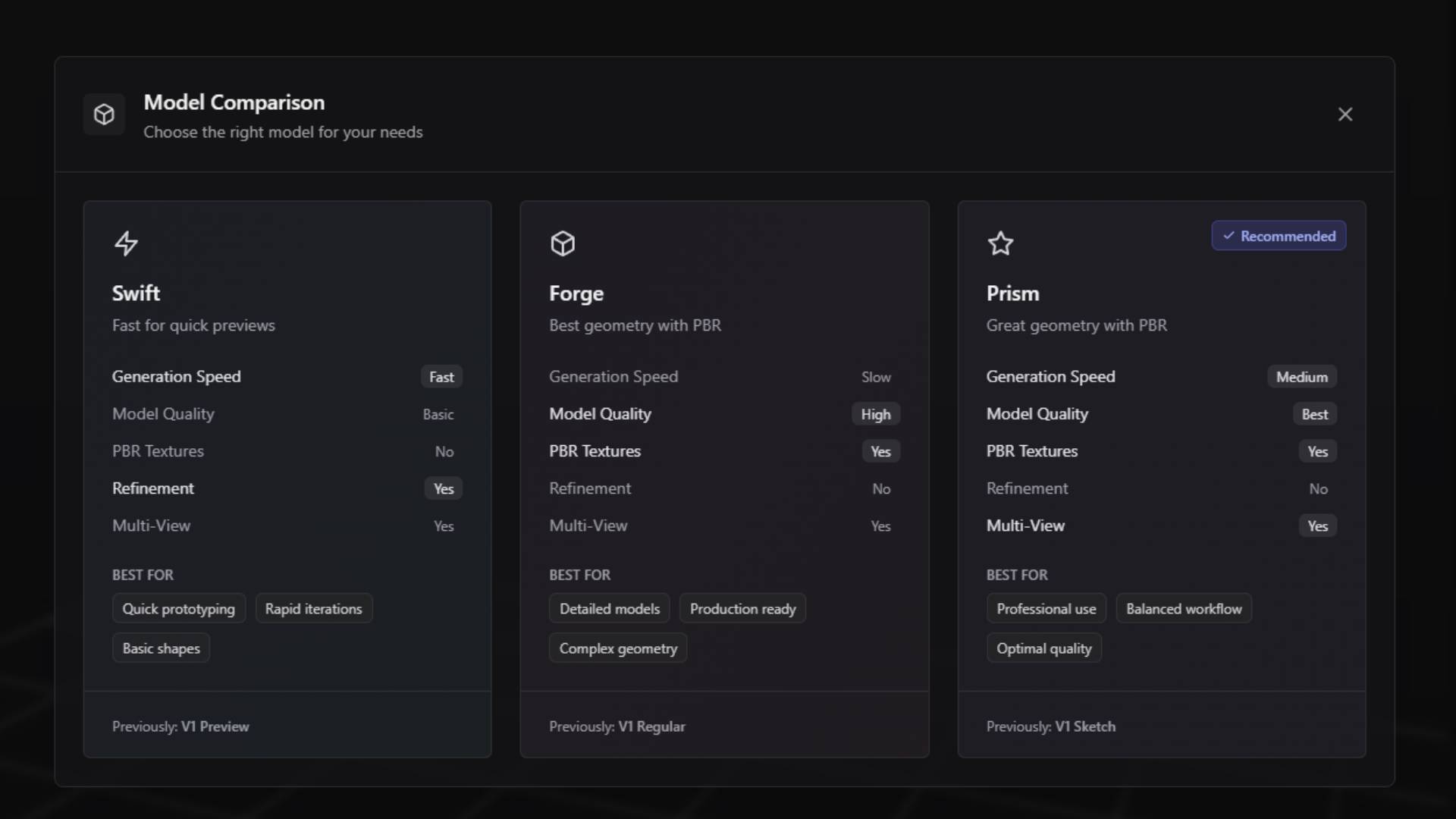

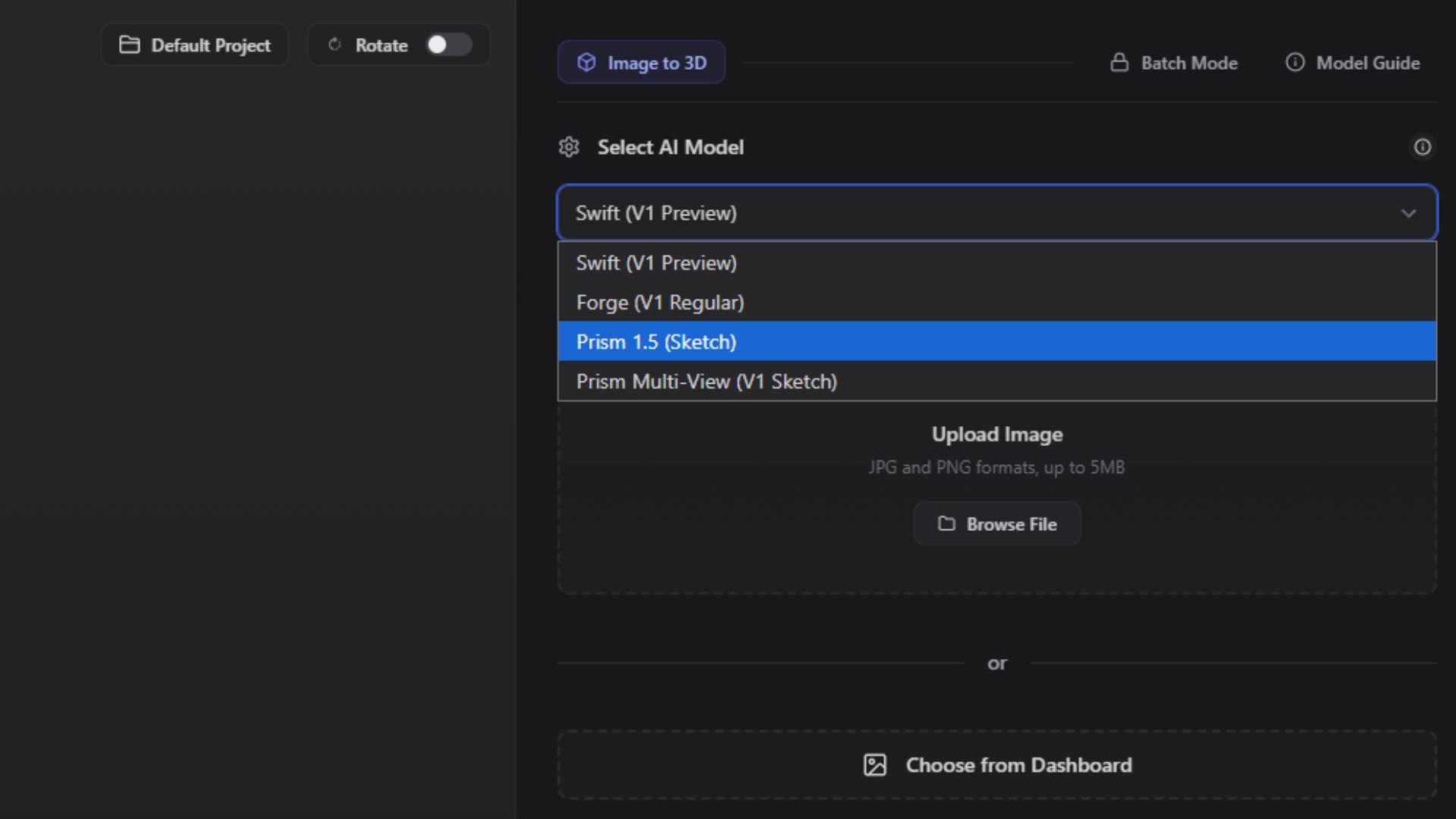

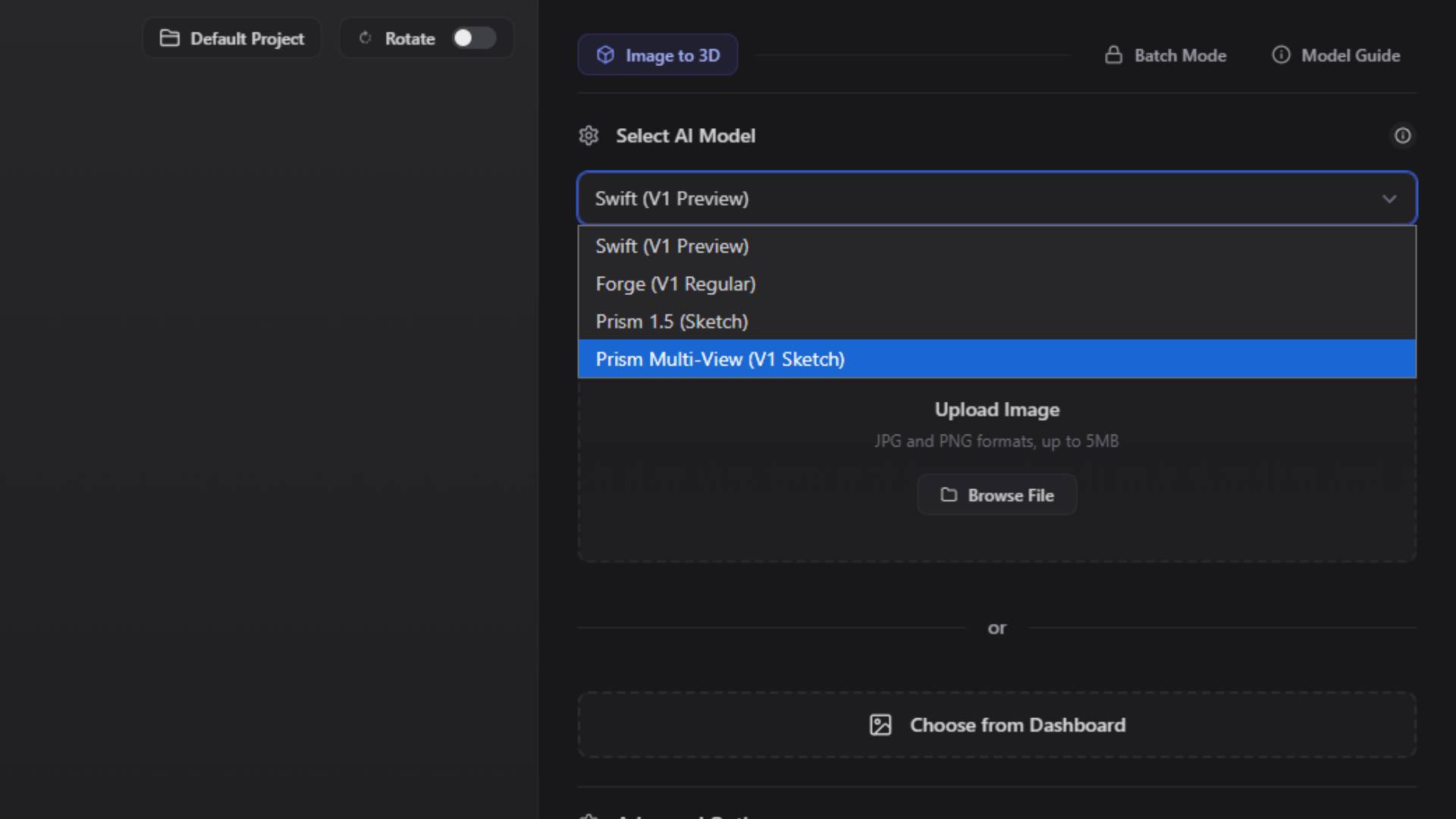

Model Selection and Generation Modes

Image to 3D offers the same trio of AI models found in Text to 3D, each tuned for a different balance between speed and precision. The Swift model delivers rapid results that are well suited to quick validation of ideas when visual fidelity is not the primary concern. Forge produces professional-grade geometry and full PBR textures at the cost of longer processing times. Prism is slightly faster than Forge while delivering comparable quality, which makes it the default choice for many commercial workflows.

Every model supports two distinct generation approaches. Single-image mode analyses one reference picture and extrapolates the missing dimensions to create a full 3D object. Multi-image mode accepts several photographs of the same subject from different viewpoints and reconstructs a more accurate model by triangulating information across those angles. Swift multi-view is ideal for concept validation when you have a few quick product shots. Forge multi-view pushes geometric accuracy further and accepts up to five images, while Prism multi-view balances reconstruction quality against a four-image limit that keeps processing times manageable.

The settings panel on the right adapts automatically when you switch between single-image and multi-image modes. Prompt and negative prompt fields appear for the Swift model just like they do in Text to 3D, giving you a way to steer overall style with minimal input. Forge exposes additional controls such as shaded versus PBR rendering, global quality levels, random seed entry for repeatability, optional bounding-box constraints for real-world dimensions, and an A-pose toggle that improves anatomical accuracy when generating humans or creatures. Prism extends these advanced controls further by offering independent seeds for geometry and textures, a face-limit value for balancing mesh density, toggles for texture generation and full PBR material output, and an auto-size feature that scales the model to realistic measurements based on context.

Working with Single-Image Mode

Single-image mode is the fastest way to move from a flat picture to a 3D asset. You can drag a file straight onto the upload area, or browse your drive and select a file in a standard format such as PNG or JPEG. The system analyses colour, lighting, and perspective cues to infer depth and structure. Because a single photograph cannot show the subject from every angle, the generated geometry sometimes needs light touch-ups, but for many static renders and concept visualisations the result is more than sufficient. Material fidelity improves noticeably when you choose Forge or Prism and enable PBR output, because these models generate normal, roughness, and metallic maps that simulate fine surface detail even when the base mesh is relatively simple.

Working with Multi-Image Mode

Multi-image mode shines whenever accuracy and completeness matter. The upload section expands to accommodate multiple thumbnails, and the system guides you to provide front, back, left, and right views in any order. Forge accepts five pictures while Prism stops at four. This cap is deliberate: beyond that number, extra angles add processing time without a proportional gain in accuracy. As soon as the last image finishes uploading, the generation button activates and the reconstruction pipeline fuses visual cues from every photograph into a single cohesive model. The resulting geometry usually requires far fewer edits than a single-image reconstruction, and the automatically generated textures tend to line up across seams because the engine has seen every side of the object.

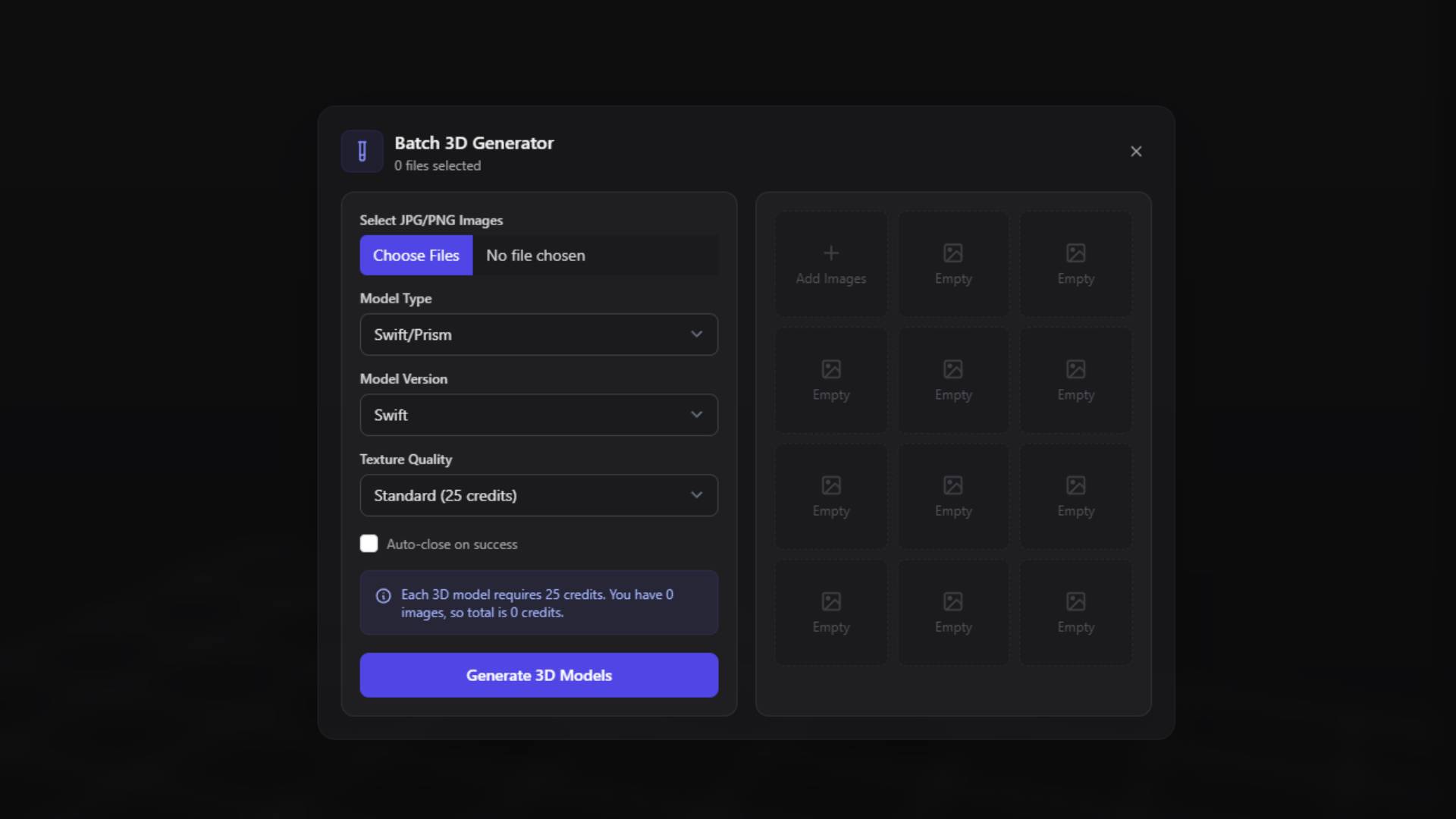
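
If you plan a multi-view shoot ahead of time, a quick local check can catch an oversized image set before you reach the upload panel. The sketch below is a hypothetical helper, not part of 3D AI Studio: it assumes you label each photo with the view it shows, and it encodes only the limits stated in this guide (five images for Forge, four for Prism). Swift's multi-view limit is not given here, so the helper reports it as unknown rather than guessing.

```python
# Hypothetical local pre-upload check; not a 3D AI Studio API.
# Limits are taken from this guide (Forge: 5 images, Prism: 4).
# Swift's multi-view limit is not stated, so it is deliberately omitted.
MULTI_VIEW_LIMITS = {"Forge": 5, "Prism": 4}

# The views the upload panel asks for, in no required order.
SUGGESTED_VIEWS = ("front", "back", "left", "right")


def check_multi_view_set(model: str, views: list[str]) -> list[str]:
    """Return warnings for a planned multi-image upload (empty list = looks fine).

    `views` holds your own label for each photo, e.g. "front" or "left".
    """
    warnings = []
    limit = MULTI_VIEW_LIMITS.get(model)
    if limit is None:
        warnings.append(f"No known image limit for {model}; check the upload panel.")
    elif len(views) > limit:
        warnings.append(f"{model} accepts at most {limit} images, got {len(views)}.")
    # Report any suggested angle that is not covered by the planned photos.
    missing = [v for v in SUGGESTED_VIEWS if v not in views]
    if missing:
        warnings.append("Missing suggested views: " + ", ".join(missing))
    return warnings
```

For example, `check_multi_view_set("Prism", ["front", "back", "left", "right", "top"])` flags the fifth image, while the same list passes for Forge.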

Batch Mode for High-Volume Generation

Professionals who need to convert entire photo sets can rely on batch mode. A discreet toggle at the bottom of the settings panel reveals a bulk uploader that accepts up to twenty images in one operation. Each file spawns its own generation job and the system processes them sequentially, dropping finished results into your default project as they complete. Batch processing removes the repetitive labour of uploading and clicking generate for every single picture, freeing you to focus on evaluating results rather than managing the interface. The real-time progress list shows which files are in queue, which are currently generating, and which are ready to download.
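
Because a batch is capped at twenty images and runs most smoothly when files share a consistent resolution and aspect ratio, it can be worth checking a folder before uploading it. The following is a minimal sketch under stated assumptions: the function name and the idea of passing in (filename, width, height) tuples are illustrative, and the PNG/JPEG check reflects only the formats named in this guide, so any other format is flagged for you to confirm rather than treated as unsupported. If you use Pillow, `Image.open(path).size` returns the (width, height) pair for each file.

```python
from fractions import Fraction
from pathlib import PurePath

# Hypothetical pre-flight check for batch mode; not a 3D AI Studio API.
BATCH_LIMIT = 20  # this guide: up to twenty images per batch
ALLOWED_SUFFIXES = {".png", ".jpg", ".jpeg"}  # formats named in this guide


def preflight_batch(images: list[tuple[str, int, int]]) -> list[str]:
    """Check (filename, width, height) entries before a batch upload.

    Returns human-readable problems; an empty list means the set looks ready.
    """
    problems = []
    if len(images) > BATCH_LIMIT:
        problems.append(f"{len(images)} files exceed the batch limit of {BATCH_LIMIT}.")
    for name, _, _ in images:
        if PurePath(name).suffix.lower() not in ALLOWED_SUFFIXES:
            problems.append(f"{name}: not PNG or JPEG; confirm the format is supported.")
    # Distinct resolutions, and the reduced aspect ratio of each one.
    sizes = {(w, h) for _, w, h in images}
    ratios = {Fraction(w, h) for w, h in sizes}
    if len(ratios) > 1:
        problems.append(f"Mixed aspect ratios: {sorted(str(r) for r in ratios)}")
    elif len(sizes) > 1:
        problems.append(f"Same aspect ratio but mixed resolutions: {sorted(sizes)}")
    return problems
```

Comparing reduced fractions rather than floating-point ratios means 1920×1080 and 1280×720 are recognised as the same 16:9 shape without any rounding tolerance.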

Integrating Image Studio for Maximum Control

While Image to 3D can handle a raw photograph just fine, creative teams often prefer to shape the source image first. The Image Studio provides a complete toolkit for that purpose. You can bring in a hand-drawn sketch, a rough render from another application, or a photograph straight from your phone, then use magic-edit, style-transfer, or sketch-to-image features to align colours, sharpen silhouettes, or introduce new design elements. Once the image looks exactly the way you want, a single click exports it to Image to 3D, where geometric reconstruction begins. This two-stage process helps the final 3D asset match your artistic direction, because you have already locked in the look and feel during the image editing phase. You can learn more about these editing capabilities in the Image Studio guide.

Best Practices and Creative Tips

Achieving reliable, high-quality results comes down to controlling both the visual clarity of your reference images and the generation settings you choose. Well-lit photos with minimal motion blur give the AI more information to work with, and consistent backgrounds reduce the risk of stray geometry around the edges. Multi-image mode benefits from keeping camera distance and focal length similar in every shot, while batch mode runs most smoothly when files share consistent resolution and aspect ratio. Where a prompt field is available, as with the Swift model, it remains valuable even in Image to 3D, because descriptive words help the AI refine ambiguous areas that fall outside the frame. Recording successful seed numbers lets you revisit promising results later, and toggling PBR on in Forge and Prism ensures normal, roughness, and metallic maps export alongside geometry for seamless integration with real-time engines.
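
A plain log file is one simple way to keep track of seeds. The sketch below is a hypothetical local helper, not a 3D AI Studio feature: it appends one JSON line per generation, storing a single `seed` for Forge runs and separate `geometry_seed` and `texture_seed` values for Prism, mirroring the seed controls described earlier in this guide. The function and field names are illustrative assumptions.

```python
import json
from pathlib import Path
from typing import Optional


def record_seed(log_path: Path, model: str, source_image: str,
                seed: Optional[int] = None,
                geometry_seed: Optional[int] = None,
                texture_seed: Optional[int] = None,
                note: str = "") -> dict:
    """Append one generation's seeds to a JSON Lines log and return the entry.

    Hypothetical helper: field names are illustrative, not a 3D AI Studio format.
    """
    entry = {"model": model, "source_image": source_image, "note": note}
    # Forge exposes one seed; Prism exposes separate geometry and texture seeds.
    if seed is not None:
        entry["seed"] = seed
    if geometry_seed is not None:
        entry["geometry_seed"] = geometry_seed
    if texture_seed is not None:
        entry["texture_seed"] = texture_seed
    with log_path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(entry) + "\n")
    return entry


def load_seeds(log_path: Path) -> list[dict]:
    """Read every logged entry back, skipping blank lines."""
    with log_path.open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]
```

A line-per-entry format keeps the log append-only, so recording a new result never requires rewriting earlier entries.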

The combination of Image to 3D, Image Studio, and the broader 3D AI Studio ecosystem creates a flexible pipeline that adapts to rapid concept exploration as easily as polished final production. Whether you are converting a single photo into a quick prototype, reconstructing a product from a multi-angle shoot, or batch-processing an entire photoset for an e-commerce catalogue, the tools and techniques described in this guide provide the control you need to move from image to immersive 3D asset with confidence.

When you have finished reading, navigate back to the 3D AI Studio Dashboard to start converting your own images, or explore the advanced Text to 3D guide for an alternative approach based purely on descriptive language.